Knowing-through-Making in Chemistry and Biology:

A Study of Comparative Epistemology

Joachim Schummer*

Abstract: The epistemological idea according to which knowledge can and perhaps must be gained by the making of something new has played a central role in Western philosophy for about two millennia. While largely unknown in the theory-focused philosophy of physics/science prevailing nowadays, it was crucial for the development of both modern chemistry and biology, from synthetic chemistry to genetics and synthetic biology. Rather than discarding that as technology, or relabeling it as ‘techno-science’, this paper takes knowing-through-making as an epistemological principle of science proper and analyzes its role in the history of philosophy, chemistry, and biology up to the present. It argues for a comparative epistemology of science, here for a comparison between chemistry and biology, to develop a better understanding of the similarities and differences of the sciences, rather than lumping them all together and treating them according to one’s favorite discipline, which has usually been physics. Taking knowing-through-making seriously also requires a new integration in philosophy of science that includes epistemology (knowing), ontology (making something new), and ethics (the normative implications of changing the world).

Keywords: verum-factum principle, knowing-through-making, comparative epistemology, synthetic chemistry, synthetic biology, integrated philosophy of science.

1. Introduction

Nine decades ago, the American pragmatist John Dewey (1929, p. 29) ridiculed the prevailing "spectator theory" in epistemology/philosophy of science, according to which the works of scientists had persistently, and wrongly, been modeled after a passive spectator who looks at the objects of study from a distance, like an astronomer looking at the stars.[1] While Dewey himself became influential in educational matters, e.g., by establishing the Learning-by-doing or Hands-on approach, his impact on his fellow philosophers of science has been very limited. There has been some acknowledgment of experiments as theory testing, instrument using, or heuristic exploration, but still much seems to be modeled on vision, now equipped with some instruments. When it is undeniable that scientific knowledge is pursued by manipulating the objects of study, as for instance in particle physics or genetics, some philosophers use terms such as ‘technoscience’, originally introduced by French philosopher Bruno Latour (1987) to emphasize the social context of science, to denounce sciences that do not comply with the spectator view.

How would they respond to the claim that scientists also systematically employ the making of something anew for acquiring scientific knowledge? Would they just discard that as ‘mere technology’? Perhaps, but then we had better ask whether the received philosophy of science is familiar with contemporary science, technology, and philosophy (Schummer 1997b).

Knowing-through-making or KTM (better known as the verum-factum principle) has a long history of two millennia that philosophers might know better than philosophers of science. In some sense it is one of the oldest epistemological principles. It originally related knowledge of the world to the creation of the world, making knowledge a privilege of the creator god. Section 2 provides a sketch of that history and explains why it had almost been forgotten, before it was rediscovered in reflections about chemistry in the 19th century. Any good hands-on chemistry instruction illustrates the basic matter to pupils, including to philosophers: you can learn about chemical properties only by letting substances react with each other, which inevitably produces other substances, rather than by gazing at them from a distance with a telescope or looking glass. Chemistry went much further than that and, since the mid-19th century, has developed theory-guided methods to analyze the molecular structure of about a million compounds by actually making them anew in the laboratory. Section 4 explains the different methods of KTM in chemistry after some conceptual distinctions are introduced in Section 3.

Biology is frequently said to have only recently followed chemistry’s 19th-century analytic/synthetic turn. However, Mendel’s cross-breeding experiments with plants, which are widely considered the foundation of modern genetics, were performed in the mid-19th century, at about the same time that chemistry developed its sophisticated knowing-through-making approaches. That example, as well as the numerous methods for understanding physiological structure-function relationships, from organ damage to gene knock-outs, establishes a rich history of KTM in biology, briefly sketched in Section 5. The more recent approaches to creating life from scratch, protocell research and synthetic genomics, frequently put under the common umbrella of ‘synthetic biology’, have made ambitious claims that their making enables new biological knowledge. By using the conceptual distinctions we will see that these claims, unlike the former ones, are not uncontentious but instead would require more elaboration.

Knowing-through-making as an epistemological principle, which the physics-based philosophy of science has overlooked, establishes epistemological links both between the sciences of chemistry and biology and between the philosophy of chemistry and the philosophy of biology. That is much more productive than discussing scientific disciplines in terms of a reductionist hierarchy, an understandable human effort by physicists to base all the sciences on physics and all the philosophies of the sciences on philosophy of physics. In contrast, by identifying both common and different epistemological grounds between the disciplines, we can much better understand the potentials and obstacles of interdisciplinarity in science, which helps the sciences; and we can do this in collaboration between philosophers of the respective sciences. In sum, the following discussion of KTM is just one example of the still hardly existing comparative epistemology of the sciences, which aims at building bridges, rather than hierarchies, both between the sciences and between their respective philosophies.

Because the meaning of the English term ‘epistemology’ was considerably transformed in various directions in the 20th century – unlike, for instance, French épistémologie, Spanish epistemología, Italian epistemologia, German Erkenntnistheorie, etc. – it might be useful to recall that ‘epistemology’ historically means, and in the following still means, theory of knowledge, one of the main branches of modern philosophy. It belongs neither to philosophy of language, nor to mathematical logic, let alone neurophysiology; and it is not devoted to non-scientific knowledge, but focuses on what we consider the most advanced form of knowledge, i.e. now mostly scientific knowledge. Thus, epistemology is largely about the scientific ways of developing knowledge, which greatly differ among disciplines, depending on the kind of questions being asked, from historical questions such as in biological evolution or archeology to taxonomic and causal issues. We have ample information about how the quality, novelty, and relevance of scientific knowledge are assessed across the sciences, i.e. by double-blind peer review. However, we still know very little, at least from philosophers of science, about the actual methods scientists use to develop their knowledge and what epistemological principles they employ, each in their own discipline (except for theoretical physics, perhaps). Thus, we are only at the very beginning of exploring comparative epistemology, because both cross-disciplinary comparison and epistemology have been neglected during the long-standing dominance of theoretical physics and its alleged logic. So, let us start anew from scratch.

2. Knowing-Through-Making in Philosophy

2.1 Ancient and early modern philosophy

The epistemological idea that true knowledge can (and perhaps even must) be gained by making things plays a strange role in the history of Western philosophy. On the one hand, the idea seems to have just been taken for granted by many Christian philosophers. Authors assumed that God, by virtue of his Creation, has a unique and privileged epistemic access to nature. When the Italian philosopher Giambattista Vico (1668-1744) first explicitly stated the idea in modern times as an epistemological principle ("the true and the made are convertible", Vico 2010, p. 17, usually called the verum-factum principle), right at the beginning of his book On the Most Ancient Wisdom of the Italians (1710), he claimed that it had already been upheld by all major ancient Latin (‘Italian’) philosophers, alas without providing names. On the other hand, in spite of the strong experimentalist tradition that began dominating science from the late 18th century on, the philosophy of science that emerged in the 20th century has hardly ever mentioned the idea, as if it had no bearing whatsoever on the epistemology of the peculiar science its proponents had in mind.

The historical role of the verum-factum principle explains its neglect and odd place in today’s philosophy. What we nowadays call ‘philosophy of science’ stands in the tradition of the early modern endeavor to establish the mechanical philosophy of nature (originally, rational mechanics or mixt mathematics) as the only true epistemic access to nature, by such diverse authors as Galilei, Descartes, Newton, and Kant, among many others. They did so by providing foundational arguments of religious, metaphysical, logical, or epistemological nature that delivered a message of epistemic optimism. By contrast, the verum-factum principle belonged to a (religious) tradition that rejected all such optimistic ambitions as false and vain. The pessimistic rejection, which prevailed in Christianity for many centuries, goes back to the influential Jewish philosopher Philo of Alexandria (ca. 20 BC-50 AD). He argued that, because humans were not witnesses of the original Creation, they can never have any first-hand knowledge of nature, because that would require knowing how, why, and when something originally comes into being (Philo of Alexandria s.d., p. 136).[2] Christian Church Father Augustine (354-430) went even further and rejected human curiosity about nature altogether as vain (Confessions X.35). That included attempts to understand Nature via understanding the Creator God, an approach upheld by ancient Gnosticism that was only rediscovered in the Renaissance to incite that optimism. Thus, for most of Western philosophy, the verum-factum principle was used to support epistemic pessimism, which did not fit well with the epistemic optimism of our mechanical philosophers.

Vico’s 18th-century rediscovery of the verum-factum principle served him largely to support his own historiographical approach in his Scienza Nuova (1725), which was based on some kind of rational reconstruction of history rather than on seeking historical evidence.[3] That approach seems to have had a strong, if only indirect, impact on German Idealism, not only on Hegel’s quite unique ‘dialectic’ history writing, which created history by rational construction, but already before that on Kant.[4] Kant thought that Nature-as-such or things-in-themselves are indiscernible, thereby rejecting all direct empiricist approaches. However, he believed that scientists had recently developed a new epistemic way of understanding nature by manipulating the phenomena (he mentioned Galilei’s experiment of rolling balls on an inclined plane; Torricelli’s experiment on atmospheric pressure; and Stahl’s transformation of metals to lime and vice versa (Kant 1787, B XIIf.)). However, rather than developing the experimental method further, Kant applied the verum-factum principle only to metaphysics: "Let us then make the experiment whether we may not be more successful in metaphysics, if we assume that the objects must conform to our cognition." (ibid. B XVI). Kant’s so-called transcendental philosophy tried to establish the fundamentals of Newtonian mechanics and Euclidean geometry as a priori truths, derived from how the human mind makes judgments independent of empirical content, what he called ‘synthetic judgments a priori’. Although the sciences soon rejected all that, by developing non-classical mechanics and geometry as more fundamental, his kind of intellectual constructivism (‘true is what pure reason makes’) became a model in philosophy.

In the Kantian approach to science, the actual objects of experimental enquiry remain indiscernible; at best they provide yes/no answers to the experimental questions of the "appointed judge who compels the witnesses to answer questions which he has himself formulated" (ibid. B XIII). That kind of logical reduction of the experimental method, which Kant had borrowed from Francis Bacon, was dismissed a century later by German philosopher Friedrich Engels (1820-1895). Engels, who developed the natural philosophy part of what came to be known as Marxism, was kept well informed about the scientific progress of his time by his good friend, the chemist and historian of chemistry Carl Schorlemmer. Not surprisingly, he took chemistry as the foremost example to argue against Kantian agnosticism:

If we are able to prove the correctness of our conception of a natural process by making it ourselves, bringing it into being out of its conditions and making it serve our own purposes into the bargain, then there is an end to the Kantian ungraspable ‘thing-in-itself’. The chemical substances produced in the bodies of plants and animals remained just such ‘things-in-themselves’ until organic chemistry began to produce them one after another, whereupon the ‘thing-in-itself’ became a thing for us – as, for instance, alizarin, the coloring matter of the madder, which we no longer trouble to grow in the madder roots in the field, but produce much more cheaply and simply from coal tar. [Engels 1886]

For the first time, a philosopher recognized modern synthetic chemistry as a model case of ‘knowing-through-making’. But that would soon be forgotten.

2.2 20th-century philosophy of science

The field that in the 20th century came to be known as ‘philosophy of science’ had long been confined to reflections on theoretical physics and mathematics, where experiments at best play a role in confirmation. Logical Positivism, which dominated the field for many decades, tried to reformulate the theories of physics in terms of mathematical logic and to reduce experimentation to sense perception. For instance, Austrian-born Gustav Bergmann, a mathematician and lawyer by training, and member of the most influential Vienna Circle, who after his emigration to the US wondrously turned into a professor of philosophy and psychology, maintained that experimentation does not add anything fundamentally new to science. He argued that we could also remain spectators and wait until each of the experimental set-ups of science incidentally emerges in nature (Bergmann 1954, Schummer 1994). Bergmann responded to the operationism of physicist Percy Bridgman, who had received the Nobel Prize in Physics in 1946 and had long and convincingly argued that experimental operations, rather than sense perceptions as the Logical Positivists claimed, are crucial for the definition of scientific concepts and other epistemic goals. Although Bridgman might have been influential on American pragmatism, particularly on John Dewey, who argued against the ‘spectator’ and ‘armchair view’ of science (see above), his influence never turned into acknowledging KTM as an independent epistemological principle.

The only approach of the analytical philosophy of science worth mentioning is by Canadian philosopher Ian Hacking (1983). He argued that if the theoretically imagined manipulation of theoretical entities is successful, such as when elementary particles can be manipulated in an accelerator as predicted, we would have strong evidence for their reality. Entrenched in theoretical physics, Hacking and many of his contemporaries and followers perhaps did not realize that the same kind of argument for ‘entity realism’, as it was called then, could equally be applied in support of any form of magic healing, such as when the successful healing of some disease by a placebo effect appears to prove the success, and thereby the reality, of some benevolent spirits. Had he ever looked at what chemists had been doing for more than a century on a grand scale, he would perhaps have developed an epistemology of physics based on KTM, rather than just providing a comment on the metaphysics of elementary particles.

Strangely enough, one of the most famous 20th-century theoretical physicists, Richard Feynman (1918-1988), is said to have upheld a strong version of KTM: "What I Cannot Create, I Do Not Understand".[5] That statement, if taken seriously as a general epistemological claim, would clearly undermine the epistemic status of astrophysics among many other fields of physics, including Feynman’s own field of quantum electrodynamics. It implies, for instance, that as long as no human has created a galaxy, a geological formation, or a quark, etc., there is no human understanding of these objects. It is uncertain whether Feynman, and the physicists who are fond of quoting him, have been aware of the epistemological implication of this confession. At least there is no published philosophical record on that.

In sum, philosophers of science have largely disregarded experimental sciences like chemistry. With their focus on physical theories, experimentation was at best a service to confirm or reject theories, or some heuristic exploration preliminary to real science. Even though a famous theoretical physicist, such as Richard Feynman, is said to have considered KTM as his personal epistemological doctrine, that has left no traces whatsoever in the philosophy of science/physics.

3. Conceptual Distinctions

Before discussing KTM in chemistry and biology, it is useful to introduce some conceptual distinctions. As an epistemological principle, KTM generally claims that the making of something enables or improves the knowledge of this something or something closely related to it. We can usefully distinguish between strong and weak, hard and soft, as well as between non-trivial and trivial versions of KTM.

The strong version of KTM claims that the making of something is a necessary condition of knowing. Thus, whoever has not yet made X does not know X. The strong version has prevailed for most of the history since Philo of Alexandria; it allowed pointing out a fundamental epistemic difference between the creator god and humans. However, Vico, Kant, and others also seem to have subscribed to the strong version for their specific field of inquiry. The Feynman quote "What I Cannot Create, I Do Not Understand" (see above) is just another, personalized form of the strong version. Vico’s phrase "verum et factum reciprocantur seu convertuntur" (see above), that the terms ‘true’ and ‘made’ are interchangeable or equivalent, even suggests that making is both a necessary and sufficient condition for knowing. In this strongest version of KTM, only the making, and any (!) kind of making, automatically provides knowledge, but that view seems to be difficult to defend.

By contrast the weak version of KTM claims that the making of something is (under certain conditions) a sufficient way to develop (certain kinds of) knowledge (in certain fields). The three provisos in brackets above, along with a more precise definition of ‘making’ (see below), are crucial for distinguishing between different positions and focusing the subsequent discussion of KTM in chemistry and biology. Unlike the spectator view of knowledge that has been maintained for all of science, weak KTM allows for methodological pluralism in science (Schummer 2015).

Sciences substantially differ from each other in their epistemic approaches, such that methods in one field cannot easily be transferred to other fields. For instance, the experimental sciences that modify or create their objects in the laboratory should not be epistemologically confused with observational sciences, like astronomy, where the ‘making’ is, strictly speaking, confined to the making of observational apparatus, concepts, and theories. There might be ‘intellectually constructivist’ views claiming that the objects of, say, astronomy are conceptually formed and in this sense ‘made’, in accordance with Vico, Kant, and others. However, that kind of ‘intellectual making’ (or better, interpreting) substantially differs from the experimental production of new material entities that never existed before and outside of a certain laboratory. The making of something, as understood here, is a material event in time and space, rather than a product of changing interpretations or views, such as when I put on green glasses and everything appears green to me.

‘Making’ or ‘creating’ something (hard version) also needs to be distinguished from ‘modifying’ something (soft version). If I drop a ball or shoot it in a certain direction and measure its trajectory, that counts neither as making nor as modifying, other than as in ‘making an experiment’. Instead, ‘making’ or hard KTM refers to a material object and requires an ontological shift from one kind of entity to another, like from bricks to a wall or a house, from a chemical substance to another one, or from a mixture to a new compound, structure, or even a living organism, or from one biological species to another one. In process ontology, that may also include a change from linear flows to new dynamical patterns and structures, or to the emergence of a novel kind of properties. What counts as an ontological shift depends on the current ontology of the respective discipline, rather than on ordinary knowledge. By contrast, soft KTM, which is based on modification, claims that knowledge is developed from modifying something, i.e. from changing the mode or modification of something, like from liquid to gaseous water, or from the wild type to a newly bred variation or a genetically modified form of an organism.

Science is usually not concerned with trivial knowledge that is automatically provided by any successful making of something: If I have made a brick wall, a clock, an organic substance, a biological cell, etc., (1) I know that I can make them (trivial know-that) and (2) I possibly know how to make them (trivial know-how). Trivial know-how claims are good for one’s lab notebook or a potential recipe; they do not make epistemic claims over and above the knowledge of making something at a certain point of time and space. That kind of knowledge might be important in some crafts, but it does not contribute as such to science, which requires additional knowledge about regularities, general conditions, cause-effect relations, etc. As we will see below, however, trivial KTM sometimes pops up as metaphysical or quasi-religious claims in religious societies that worship the unique capacities of a creator-god. When we humans ‘know-that’ we can also make organic substances, living beings, etc. in the laboratory, that might have an impact on our views about a creator god (and by that about the creations), but that does not belong to science.

The following will focus on non-trivial KTM, i.e. knowledge that does not simply repeat the know-how and know-that of the making. Hence, by taking KTM as an epistemological principle in science, we require some extra-knowledge from KTM, over and above trivial know-how and trivial know-that.

4. Knowing-Through-Making in Chemistry

4.1 Historical background

Among all the sciences, chemistry, and even earlier alchemy, was the first to employ hard KTM in a positive epistemological manner, which goes back to its early history. According to Ortulanus, a mid-14th-century compiler and commentator of alchemical texts, the ‘Emerald Tablet’ (an influential Hermetic text that had long been considered to be foundational for alchemy but which perhaps originated only from 6th-century Arab authors) suggested imitating God’s creation of the world in the laboratory as a way to achieve universal wisdom.[6] Imitating the Creator-God epistemologically encouraged the knowing-through-making approach, which was, however, at the same time theologically prohibited to different degrees in Islamic, Christian, and Jewish traditions because it suggested that the actors try to assume divine capacities, ‘to play God’.

Apart from that religious context, alchemical and early chemical (or ‘chymical’) work increasingly relied on operational criteria to define the identity of a substance. Whether a certain piece of matter is a sample of a certain kind of substance became increasingly dependent on its operational behavior. Earlier, some visual properties, its mining location, or some more or less secret laboratory operation had been used to identify a substance, with the exception of precious substances like gold for which operational criteria (e.g., the ‘touchstone’, specific weight) were established quite early for economic reasons. During the 18th century, laboratory operations of defined synthesis from precursors, including purification techniques and the analytical decomposition into precursors, commonly known in early modern Latin as ‘solve et coagula’ (‘analyze and synthesize’), became the standard technique for substance identification. That was usually combined with elemental analysis, which broke down any compound into its quantitative elemental composition, according to the understanding of elements of the respective time. In sum, the identity of any chemical substance was determined by making something, both by analysis and synthesis.

4.2 Logical connection

There is a logical reason, grounded in the subject matter of chemistry and its properties, why both hard and soft KTM became the dominant epistemological principles in chemistry. To begin with the soft version, if you want to determine, say, the boiling point of water, you need to boil it, i.e. to change its modification or aggregation state. However, at its core, chemistry is about chemical properties which are about how one or more chemical substances can react to form one or more other chemical substances (Schummer 1998). That is, all chemical properties are chemical reactivities, or more generally, changeabilities (which is beyond the received philosophy of science). Understanding chemical change requires hard KTM.

If X, R, and Y are chemical substances, a typical chemical phenomenon is the reaction of X with R to form Y, in short:

X + R → Y.

Two types of related chemical properties can be derived from such a reaction:

Type 1: Y has the property of being formed from the reaction of X with R. This type corresponds to the trivial form of know-how: the successful making of Y proves that Y can be made from X and R.

Type 2: X has the property of reacting with R to form Y. This type is a changeability, the property of how a substance reacts to form another one.

Because changeabilities are systematically explored by laboratory operations of change, rather than by passive observation as the spectator theory would claim, chemical knowledge is logically connected to making new substances. Chemistry can thus harvest the trivial know-how of making Y (type 1 property) for the development of non-trivial knowledge about X (type 2 property).
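The logical relation between the two types of properties can be illustrated with a small sketch. It assumes a deliberately simplified record of performed reactions, each with one substrate X, one reagent R, and one product Y; by-products are omitted, and the names `Reaction`, `derive_properties`, and `lab_record` are hypothetical choices made only for this illustration. The point is merely that one and the same laboratory record yields both the trivial know-how about products and the changeabilities of the starting materials.

```python
from collections import defaultdict
from typing import NamedTuple


class Reaction(NamedTuple):
    """One performed reaction X + R -> Y (by-products omitted for simplicity)."""
    substrate: str  # X
    reagent: str    # R
    product: str    # Y


def derive_properties(reactions):
    """Derive both property types from the same record of performed reactions.

    type1: product -> set of (substrate, reagent); "Y can be made from X and R"
    type2: substrate -> set of (reagent, product); "X reacts with R to form Y"
    """
    type1 = defaultdict(set)
    type2 = defaultdict(set)
    for rxn in reactions:
        type1[rxn.product].add((rxn.substrate, rxn.reagent))
        type2[rxn.substrate].add((rxn.reagent, rxn.product))
    return dict(type1), dict(type2)


# Hypothetical lab record with two textbook reactions of ethanol.
lab_record = [
    Reaction("ethanol", "acetic acid", "ethyl acetate"),
    Reaction("ethanol", "sodium", "sodium ethoxide"),
]

type1, type2 = derive_properties(lab_record)

for product, routes in sorted(type1.items()):
    print("know-how:", product, "can be made from", sorted(routes))
for substrate, changes in sorted(type2.items()):
    print("changeability:", substrate, "reacts as", sorted(changes))
```

Each new entry in the record extends both tables at once, which is the sense in which making a new substance also adds to the knowledge of the substance it was made from.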

There would be no systematic chemical knowledge on the experimental level which connects the various chemical substances with each other through reactions (Schummer 1998), including analysis and synthesis, without literally making new substances in the laboratory. The experimental making is neither a technological byproduct nor some theory-testing activity, as traditional views of science would see it (Schummer 1997b). It is the empirical starting point of the science of chemistry, like measuring the velocity of a moving body in mechanics. Hence, on the experimental level, chemistry is for logical reasons bound to the strong version of KTM, i.e. exploring chemical reactions is a necessary condition of knowing chemical properties. Or, in other words, because possible reactions result in possible products, exploring the realm of possible new chemical substances is the proper activity of synthetic chemistry as a science of possibilities (Schummer 1997b).

In general, the logical connection and thus the strong version of KTM holds for every experimental science that studies changeabilities, because one first needs to explore the possible changes in order to know changeabilities more generally. If one looks only at the products of change, one might misleadingly confuse a science of changeabilities with technology and strong KTM with trivial know-how. That has been a widespread misunderstanding of chemistry, or of any science of changeabilities for that matter, not least by mainstream philosophy of science (Schummer 1998). However, because of the logical relation between trivial know-how and changeabilities, as illustrated in the two types of properties above, both sides can mutually inform each other. Any systematic or theoretical knowledge of changeabilities informs know-how, and vice versa.

4.3 Structural analysis by step-wise synthesis

For most of the history of modern chemistry, chemical analysis, i.e. the determination of the elemental composition and molecular structure of a compound, followed strong KTM. Elemental composition was determined by decomposing a compound into its elemental components, i.e. by actually making the elements from the compound and then weighing them. Conservative chemistry journals still required such (‘wet chemical’) elemental analysis well into the second half of the 20th century. When the classical method was replaced by mass spectroscopy, the general approach did not substantially change: elemental analysis still works today by decomposing compounds into their elemental components (and other fragments) in the mass spectrometer and then measuring their inertial masses.

In chemical structure theory, which emerged in the 1860s, the changeabilities of organic substances are represented by functional groups and their possible rearrangements of molecular structures. For instance, the alcohol group, R-OH, represents among others the reactivity to form esters with carboxylic acids (R1-OH + R2-COOH → R2-COOR1 + H2O). Thus, rather than atoms, functional groups represent the sets of chemical reactivities characteristic of each substance group in organic chemistry. To every type of transformation on the substance level corresponds a structural rearrangement of functional groups on the molecular structure level, which in the 20th century became elaborated in the form of numerous ‘reaction mechanisms’ that depict structural change in terms of transient and intermediary states.

Chemists did not employ some magic microscope for the determination of molecular structures (as well as transient and intermediary states) but KTM. Before the instrumental revolution in chemistry in the mid-20th century (Morris 2002), which quickly established infrared, nuclear magnetic resonance, and mass spectroscopy as routine measurements (Schummer 2002), molecular structure was mostly elucidated in one of two ways. Both start with a guess to be confirmed by a series of controlled steps of chemical transformation.

In the analytical route, the substance in question is decomposed in small steps into substances of known molecular structure, such that each step can be represented on the molecular structure level in terms of standard transformations. In the synthetic route, which became an art in itself and a particularly prestigious field of chemistry, the substance in question is synthesized anew from structurally known compounds, originally from the elements, through a number of small steps. Again, each synthetic step must be represented by a standard type of molecular rearrangement in terms of functional groups, such as the esterification in the example above. Eventually, the substance identity of the newly synthesized sample with the original one is established by comparing characteristic properties, or by simply mixing them and checking for thermodynamic changes, such as melting-point depression.

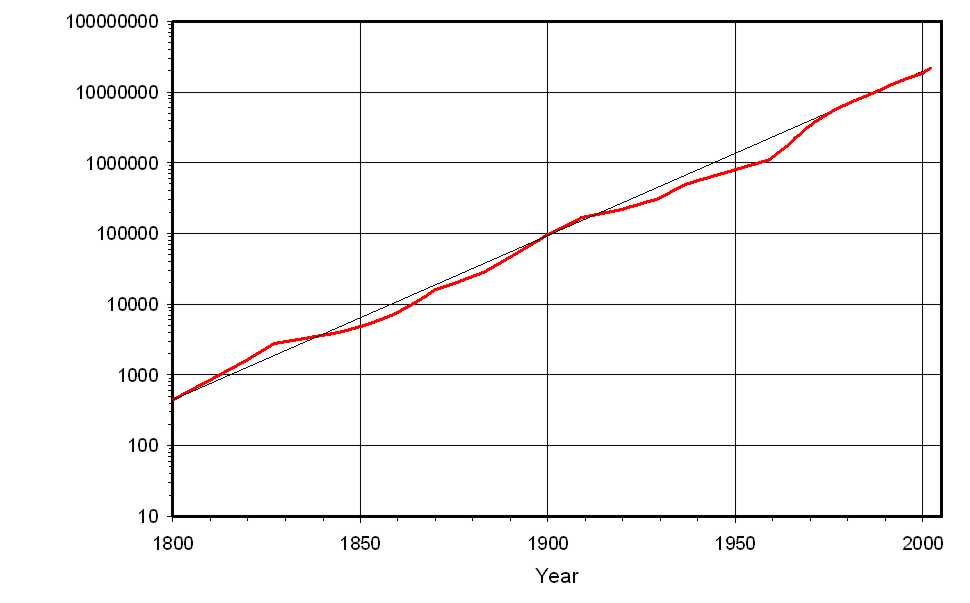

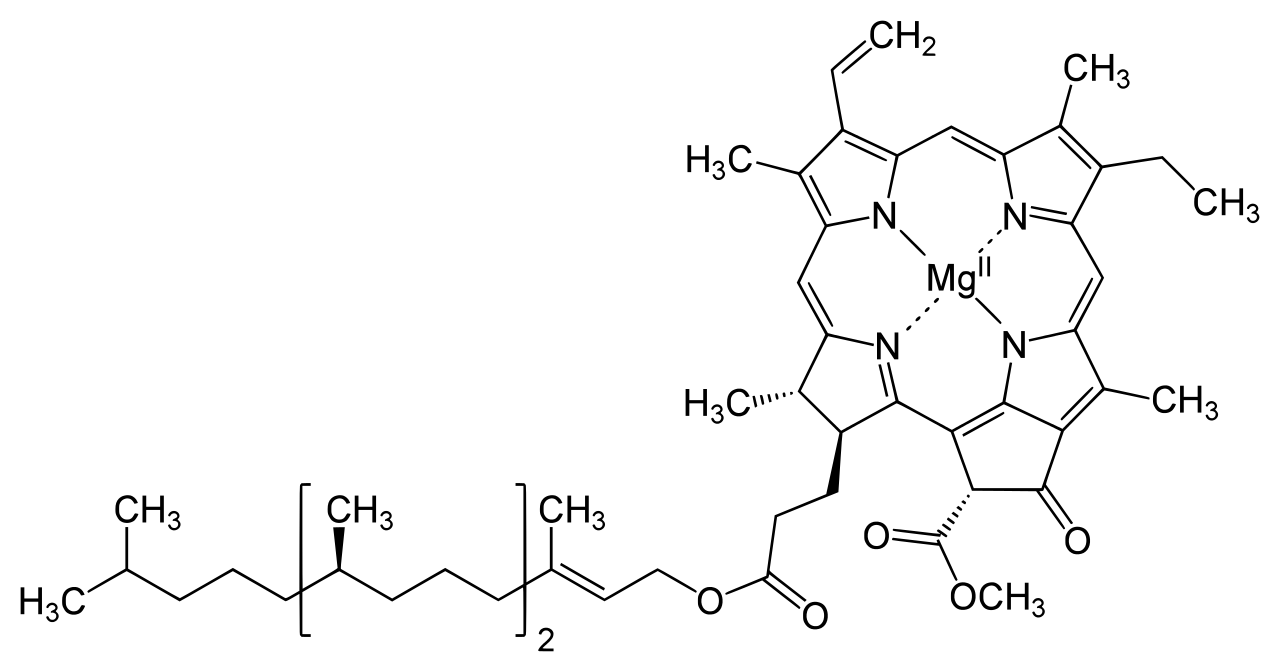

From simple substances, like ethers in the mid-19th century, to sophisticated natural products, such as chlorophyll in the mid-20th century, molecular structure analysis through chemical synthesis (or degradation) was the major approach to structural knowledge in chemistry for hundreds of thousands of substances (Figure 1). As the German organic chemist Adolf Strecker put it as early as 1854, "The artificial formation of substances in nature can be conceived as the goal that organic chemistry is striving for" (Schummer 2003). The French chemist and historian of chemistry Marcellin Berthelot went even further by calling his new textbook of chemistry Organic Chemistry Founded on Synthesis (Berthelot 1860). However, as much as chemical synthesis of organic substances contributed to scientific knowledge, it also served to disprove vitalism by experimental activity, which seems to have continued for much of the 20th century (Schummer 2008). When the synthetic approach became gradually supplemented and eventually replaced by various spectroscopic methods and x-ray diffraction for crystals, chemistry only changed from strong KTM to weak KTM in molecular structure analysis.

Figure 1. a) Growth of known substances 1800-2000 (red curve) compared to perfect exponential growth (black line) (data from Schummer 1997a). b) Molecular structure of chlorophyll.

That notwithstanding, knowing the molecular structure of an organic compound and knowing how to make it have become interchangeable, as in Vico’s original claim. Formal operational approaches to retrosynthesis, such as the ‘synthon approach’ (Corey & Cheng 1989), which allow devising a possible synthetic route for each molecular structure, have put KTM on a formal basis. Since then, molecular structure is not only inferred from making the compound, but also, inversely, the chemical synthesis of a compound is inferred from its molecular structure.

4.4 Structural analysis by inference from synthetic variations

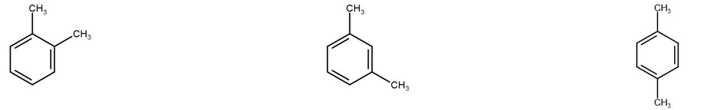

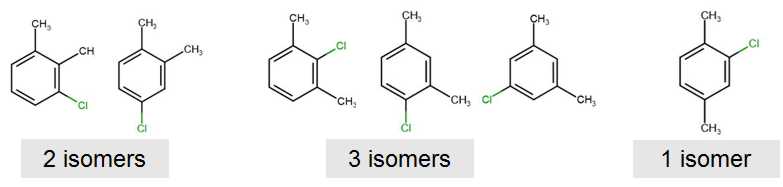

Besides structure elucidation by synthesis or analysis of the compound in question, there has been a third approach in chemistry that employs KTM, which is still important today in chemical kinetics for determining transient and intermediary molecular states that are inaccessible by spectroscopic methods. The approach, which had indirectly been used before, became firmly established by the German-Italian chemist Wilhelm Körner in his 1874 work to distinguish between aromatic isomers (Brock 1992, p. 267). Assume your substance can have, according to all available knowledge, any one of the three molecular structures of Figure 2, first row, called ortho-, meta-, and para-dimethylbenzene, or o-, m-, and p-xylene. How can one distinguish between these three isomers, which all have similar chemical properties, the same elemental composition, and very similar constitutions?

Figure 2. Ortho-, meta-, and para-dimethylbenzene (first row, from left to right) and all their possible products of mono-chlorination (second row) (adapted from Brock 1992, p. 267).

The KTM approach by Körner applies defined synthetic variations, here chlorination by adding the reagent chlorine, which substitutes for one hydrogen atom. Based on simple combinatorics, there are exactly six different possible monochloro-xylenes (Figure 2, second row): p-dimethylbenzene has only one, o-dimethylbenzene has two, and m-dimethylbenzene has three monochloro-substitutes. Thus, to answer the question, one needs to add chlorine to the compound, let them react, and count the number of resultant monochloro-xylenes in the product: one indicates the para-, two the ortho-, and three the meta-isomer of dimethylbenzene.
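The combinatorial step of Körner's argument can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are not part of the original text: the benzene ring is treated as a regular hexagon with positions 0 to 5, only ring hydrogens are substituted (not the methyl hydrogens), and the function names `ring_symmetries` and `monochloro_products` are hypothetical.

```python
def ring_symmetries(n=6):
    """Return the 12 rotations and reflections of a hexagon.

    Each symmetry is a tuple s where s[i] is the image of ring position i.
    """
    syms = []
    for k in range(n):
        syms.append(tuple((i + k) % n for i in range(n)))  # rotation by k
        syms.append(tuple((k - i) % n for i in range(n)))  # reflection
    return syms


def monochloro_products(methyl_positions):
    """Count distinct ring mono-chlorination products of a dimethylbenzene."""
    methyls = frozenset(methyl_positions)
    # Keep only the symmetries that map the set of methyl positions onto itself.
    stabilizer = [
        s for s in ring_symmetries()
        if frozenset(s[p] for p in methyls) == methyls
    ]
    free_positions = [p for p in range(6) if p not in methyls]
    # Two chlorination sites give the same product if a symmetry relates them.
    orbits = {frozenset(s[p] for s in stabilizer) for p in free_positions}
    return len(orbits)


# Ring positions of the two methyl groups in each isomer (assumed labeling).
XYLENES = {"ortho": (0, 1), "meta": (0, 2), "para": (0, 3)}
COUNTS = {name: monochloro_products(pos) for name, pos in XYLENES.items()}
```

Under these assumptions, `COUNTS` holds one product for the para-, two for the ortho-, and three for the meta-isomer, i.e. six monochloro-xylenes in total, which is the pattern that the experimental counting is compared against.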

Thanks to mathematical chemistry, simple (paper-and-pencil) combinatorics has long been replaced by group theoretical considerations that allow calculating the number of possible isomers for each elemental composition, at least for simple elements with fixed valencies. That kind of mathematical approach combined with synthetic modification and analytic counting of actual products, alien as it still is to the received philosophy of science, established an entirely new way of scientific knowledge building, because it connected KTM with mathematics (here group theory), or more generally, a priori reasoning.
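A very reduced illustration of such a group-theoretical count is Burnside's lemma applied to the benzene ring: the number of distinct isomers equals the average number of substitution patterns left unchanged by each symmetry of the ring. The sketch below is an assumption-laden toy case, not the general method of mathematical chemistry: it only counts how many isomers exist when k of the six ring hydrogens are replaced by one and the same substituent, and the function names are hypothetical.

```python
from itertools import combinations


def hexagon_symmetries():
    """Return the 12 symmetries of a hexagon as position permutations."""
    n = 6
    rotations = [tuple((i + k) % n for i in range(n)) for k in range(n)]
    reflections = [tuple((k - i) % n for i in range(n)) for k in range(n)]
    return rotations + reflections


def count_isomers(k):
    """Count isomers of a benzene ring with k identical substituents.

    Burnside's lemma: sum, over all symmetries, the number of k-subsets of
    ring positions that the symmetry maps onto themselves, then divide by
    the number of symmetries.
    """
    syms = hexagon_symmetries()
    fixed_total = 0
    for s in syms:
        for subset in combinations(range(6), k):
            chosen = set(subset)
            if {s[p] for p in chosen} == chosen:
                fixed_total += 1
    return fixed_total // len(syms)


ISOMER_COUNTS = {k: count_isomers(k) for k in range(7)}
```

For k = 2 the count is three, which corresponds to the ortho-, meta-, and para-isomers discussed above. The a priori count says how many isomers are possible; which of them a given sample actually is still has to be settled by synthetic modification and counting of the products.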

5. Knowing Through Making in Biology

5.1. Historical background

For most of recorded history, in fact from antiquity up to the mid-19th century, and in all known cultures, the creation of life was considered commonplace (Schummer 2011, chap. 3). Let some food rot for a while, and anything from mold to worms will soon emerge. Spontaneous generation (and thus intentional creation) of life was not only part of everyday experience; many natural philosophers and sacred texts, including the Bible, also mentioned it. For instance, Aristotle in the fourth century BC provided a quasi-chemical explanation of how and when life emerges out of inanimate matter; Vitruvius, in the first century BC, gave detailed advice on how to avoid it in housing; whereas Virgil, also in the first century BC, revealed a recipe for producing bees from rotting meat to make a living from honey (ibid.).

From ancient Greek mechanical automata and ancient Indian ideas of growing an army of human embryos in vessels, to Arabic alchemical fantasies of homunculi and Jewish kabbalistic legends of Golems, ideas about the artificial making of human-like beings flourished in various cultures, and greatly inspired later Western writers and playwrights (Schummer 2011, chap. 4). In societies that believe in a creator god these stories have been particularly fascinating because they relate the creative abilities of humans to those of their god. In the Jewish-Christian tradition, where, on the one hand, god is said to have created humans in his likeness and, on the other hand, assuming the attributes of god is the greatest sin, the reason for fascination (and severe conflicts) is built-in, so to speak (ibid., chap. 15). In the Jewish Golem tradition, the spiritual ambition to create a human-like Golem was to imitate, and thereby to understand better, the creator god, i.e. an epistemic goal, a form of KTM, similar to the alchemical idea quoted above. As long as the Golem was mute and in every regard clearly inferior to humans, the human creator did not compete with the creator god and thus did no wrong (ibid.).

Before the acceptance of biological evolution theory, the generation of human beings was not related to spontaneous generation or human creation of simple living beings. As Darwinian ideas gained public acceptance in the late 19th or early 20th century, depending on whom and which country one counts, people realized that humans could possibly derive indirectly from spontaneous generation or even from the human creation of simple living beings without divine influence. That not only caused a creationist counter-movement, particularly in the US where it is still influential, which denies both evolution and the age-old beliefs in spontaneous generation and relates each and every organism back to the primeval creation (ibid.). It also connected for the first time the human creation of simple life to that of real humans. Since then the smallest research detail that could possibly be related to the creation of life has received the utmost media attention, in contrast to the millennia before when it was just taken for granted (ibid., chap. 5).

During the 20th century, claims about the creation of life frequently hit the headlines, from chemically induced parthenogenesis of sea urchins by Jacques Loeb in 1905, to in-vitro fertilization, to numerous achievements of genetic engineering (ibid., chaps. 6-7). In addition, the term ‘synthetic biology’, or some variation of it in other languages, was soon used to denote various ideas, including biological experimentation (Leduc 1912) and the controlled chemical manipulation of biological organisms (Fischer 1915). James Danielli, a chemist-turned-biologist who in 1970 claimed to have created the first unicellular organism (by assembling the membrane, cytoplasm, and nucleus of three different amoebae into one new amoeba), coined the term ‘synthetic biology’ in a sense similar to its current meaning, including its various technological promises (Schummer 2011, chaps. 6-7). Referring to the 19th-century move from analytical to synthetic chemistry, Danielli (1974) argued that biology had made a similar move, from analytic to synthetic biology, in the 1960s.

Before we discuss the recent ambitions to create life from scratch as a way of hard KTM, it is useful to look first at the forms of soft KTM in biology.

5.2 Knowing through manipulating in biology

Throughout the history of modern physiology, the study of biological functions of parts of an organism has been conducted by investigating the effects of intentional or accidental modifications. For instance, the human pathology of injuries has been a rich source for understanding biological function, or the lack thereof through damage, from entire organs to brain regions. In classical plant and animal physiology the approach became a standard experimental method: when damaging or removing a structural part of a living organism results in the loss of a certain function, there is strong evidence that the structural part is (co-)responsible for performing that function. Evidence increases if recovery of the structure restores the function.

The modern science of molecular genetics, as it has developed since the 1960s, rests on exactly the same approach, called gene knockout. When the removal (or deactivation) of a certain DNA sequence of an organism results in the loss of a function (usually the loss of a protein that performs a certain function), the DNA sequence is considered to be a gene that (co-)encodes that function. And in reverse, if the insertion of that DNA sequence into a genome results in activating the particular function of the organism, there is strong evidence that the sequence encodes exactly that function. What appears to be just a technological toolbox became one of the most powerful epistemic tools of the 20th century, one that turned much of biology upside down (ibid., chap. 8). Since genetic manipulation allowed functions to be attributed at the genomic level, the genotype, rather than the phenotype, became the preferred object of study in many pivotal fields of biology, including taxonomy and evolution theory.

In the 1990s, metabolic engineering went one step further than classical genetic engineering by not only ‘knocking out and in’ particular DNA sequences (or genes) that encode particular proteins, but by identifying and transferring systems of sequences that together encode more complex biological functions or organs, like bioluminescence or chemical sensors. In the most recent version of ‘Synthetic Biology’, that approach has been reframed in terms of electrical engineering or computer science, e.g. as in ‘logical or regulatory circuits’.

The very origins of molecular genetics, the ‘deciphering of the genetic code’, also rest on KTM. In 1961, the two biochemists Marshall W. Nirenberg and J. Heinrich Matthaei began feeding inanimate bacterial cytoplasm with chemically synthesized polynucleotides, snippets of RNA, and a set of amino acids with radioactive markers. When they found that the protoplasm/ribosomes newly produced (later called ‘translated’) poly-phenylalanine protein from poly-uracil RNA, they varied the RNA sequences. From the variation pattern of resultant polypeptides (small proteins), they could eventually deduce which sequence of three nucleotides resulted in the bio-synthesis of which amino acid, i.e. the genetic code (Rheinberger 1997).
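The logic of that deduction can be hinted at with a toy sketch. It covers only the simplest case of homopolymer RNAs, where the repeated triplet is unambiguous. Only the poly-uracil result is taken from the text above; the entries for poly-A and poly-C are standard textbook codon assignments added here for illustration, and the function names are hypothetical.

```python
def infer_homopolymer_codons(observations):
    """Assign each homopolymer's repeated triplet to the amino acid observed.

    `observations` maps the single base of a synthetic homopolymer RNA
    (e.g. "U" for poly-uracil) to the amino acid found in the polypeptide.
    """
    return {base * 3: amino_acid for base, amino_acid in observations.items()}


def translate(rna, codon_table):
    """Read an RNA string triplet by triplet; unknown triplets become '?'."""
    usable = len(rna) - len(rna) % 3
    return [codon_table.get(rna[i:i + 3], "?") for i in range(0, usable, 3)]


# "U" -> "Phe" is the result reported in the text; "A" and "C" are textbook
# assignments added for illustration only.
OBSERVED = {"U": "Phe", "A": "Lys", "C": "Pro"}
CODONS = infer_homopolymer_codons(OBSERVED)
POLY_U_PRODUCT = translate("UUUUUUUUU", CODONS)
```

Mixed sequences cannot be resolved this simply, which is why the variation of synthesized RNA sequences and the comparison of the resulting polypeptides were needed to fill in the remaining triplets.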

The examples above are cases of soft KTM in biology, which differ from hard KTM because they do not imply ontological change. However, there are borderline cases. One is the ‘deciphering of the genetic code’, because it literally created a variation of polypeptides by a variation of polynucleotides, which are chemically and biochemically different species. But that change is based on chemical/biochemical ontology rather than on the distinction between biological species. The long traditions of cross-breeding, plant grafting, and other forms of hybridization are another group of borderline cases. Breeding can help develop new biological knowledge, as for instance in Mendel’s classical mid-19th-century experiments or in early evolution theory, but does not involve a change on the biological species level, and thus is knowing through manipulation. The numerous techniques of making hybrids, i.e. intermediary or mixed species by cross-breeding, grafting, or the exchange of nuclei, do create something new, but their uses have mostly been of technological value in agriculture and gardening. There is vast empirical knowledge about the possibilities and limits of forming hybrids for cultivation, but theoretical understanding of hybridization is still underdeveloped.

Our distinction between modification and making, or between soft and hard KTM, is based on the biological species concept, which may not be appropriate here. Indeed, biology has inherited that pre-evolutionary species concept from natural theology, originally developed by John Ray and Carl Linnaeus in the 17th and 18th centuries and based on the notion of an undisrupted lineage by sexual reproduction back to divine creation. Many biologists avoid the concept nowadays and speak of ‘populations’ instead; others refer only to the ability of sexual reproduction. However, the species concept has never been radically and consensually revised so as to fit the entire realm of life, including for instance bacteria that do not fit the human model of sexual reproduction. Once such a revision is made, the examples discussed above might also count as cases of hard KTM.

5.3 Creating life from scratch: Protocell research and synthetic genomics

One way to avoid the biological species issue is by creating life from inanimate matter. At the turn of the 21st century, two approaches made particularly strong claims to hard KTM in biology (Schummer 2011, chap. 8). As DNA sequence analysis and synthesis became cheaper, faster, and more accurate, and as the toolbox of genetic/metabolic engineering became more sophisticated and reframed in terms of functional building blocks and regulatory networks, synthetic genomics promised to gain better understanding of life by making an entirely new synthetic organism through the design and synthesis of its entire genome. In contrast to this genome-focused approach, protocell research aims at creating a cell-like structure that shows basic features of a living cell. While protocell researchers gave up the earlier goal of understanding the chemical origin of the earliest life forms (called ‘chemical evolution’), they promised that the making of any kind of protocells would enhance our understanding of life (e.g. Mann 2013).

Both synthetic genomics and protocell research would meet the formal requirement of making something new, a living organism from inanimate matter, once they are successful. The epistemological question is whether they can possibly provide new biological knowledge on organisms based on KTM as an epistemological principle. Of course, every kind of experimental tinkering reveals some insight into the particulars of the object of study, and there would certainly be something new to learn from an artificially made organism.[7] However, do we automatically learn something new about an organism by making it in the laboratory in a similarly systematic way as, for instance, 19th-century chemistry elucidated the molecular structure of compounds by synthesizing each of them in small, controlled steps?

Let us first exclude trivial forms of KTM (see above). The successful creation of a living organism would provide a protocol of how to make it (trivial know-how), provided the experiment is reproducible. Such trivial know-how, while important for experimental practice and possible technologies, does not provide any extra knowledge other than how to make it. Second, the successful creation would prove that humans can do it (trivial know-that). Much more so than in 19th-century chemistry, trivial know-that in biology is of metaphysical and religious importance. Synthesizing life from inanimate matter could eradicate vitalism once and for all, at least in its most naive form. It would also undermine the Christian creationism that evangelicals have cultivated in the US and spread worldwide through missionaries and TV programs. Although these might count as important social or metaphysical contributions, trivial know-how and know-that do not contribute to science proper.

What kind of biological insight could we gain instead? Would life synthesis help us to develop a better understanding of the biological concept of life, as many have claimed? Strangely enough, life is a rarely defined concept in biology, as if biologists either take it for granted or do not care about it. Attempts at defining the concept usually list properties such as self-organization and homoeostasis, metabolism, and reproduction, sometimes also the ability of evolutionary development and a material basis of DNA/RNA and/or proteins – to distinguish real life from so-called ‘artificial life’, which is a term for software that simulates biological processes. Definitions of life are expected to distinguish biological organisms from other things and processes that meet one or more of the criteria, such as a soap bubble (self-organization, homoeostasis), a candle flame (self-organization, homoeostasis, and metabolism), crystal growth (self-replication), mineralization under changing environmental conditions (evolutionary development), viruses (RNA and protein-based), and so on. If life is taken as a scientific concept, rather than a religious mystery or the personal biography of a human, its definition is a matter of dispute and conflicting interest among scientists, which cannot be solved by experiments. To the contrary, those who aspire to create life would probably fight for a minimalist definition such that their goal could be achieved easier – the software engineers who propagated ‘artificial life’ in the late 1980s are a telling example. Hence, one should not expect concept clarifications from partisan fighting for radical definitions, nor confuse definitions with scientific knowledge.

By giving up the epistemic goal of understanding the chemical origin of life, and instead aspiring to create any kind of system that meets some of the above-mentioned criteria and that can fulfill possible tasks, protocell research moved from science to possible technology, but even that remains unclear. Understanding the origin of life is a respectable scientific goal that contributes to the historical knowledge of the world we all live in, independently of any religious or metaphysical implications that this might have in a specific culture. By contrast, creating something that can fulfill possible tasks for economic purposes is as such not a scientific goal, because it does not contribute to scientific knowledge but to mere know-how. That might be one reason why some protocell researchers denigrate research on the historical origin of life as speculative, a charge that would equally apply to anything from astrophysics, astrochemistry, and biological evolution to archaeology and historiography, while highlighting their own research as being ‘experimental’ in contrast (Mann 2013). However, from an epistemological point of view, it is unclear what their own actual epistemic goals or possible contributions to science are, besides trivial KTM, because chemists such as Stephen Mann articulate their views in a language that is largely alien to epistemology. Moreover, it still remains to be seen if the creation of an entirely new organism from scratch could possibly have, by technological and economic measures such as cost-benefit analysis, any advantage over the genetic modification of existing bacterial or yeast forms.

Synthetic genomics, on the other hand, seems to have shifted from genetic engineering to a core field of scientific biology that first of all aims at a thorough understanding of life on the genome level, before designing other life forms at will. The search for a minimal genome that is just sufficient to serve basic life functions proceeds either by eliminating parts from an existing genome (‘top-down’) or by designing the minimal genome from scratch (‘bottom-up’). The top-down approach is an extended version of knowing through modification discussed above. It could be blindly performed by trial and error, i.e. by varying knockout steps until a minimal genome is found that, once inserted into a cell, can sustain basic life functions of a certain hybrid. Or the knockout steps are guided by genetic knowledge, such that all parts of the genome that appear to be obsolete are cut off, which would be equivalent to the bottom-up approach.

Let us assume the sophisticated approach would be successful one day by producing something that would meet some definition of living beings for a while: what knowledge would we gain other than trivial forms of KTM, i.e. that and how we can make a certain hybrid from a minimal genome? Ideally perhaps one could relate individual genome sequences to basic life functions. However, synthetic genomics would face the same definition problem as protocell research: it is not clear, and a matter of debate between stakeholders, what exactly the minimal basic life functions are that the minimal genome should serve. Thus, the result would likely stir new disputes on the biological concept of life rather than just provide new evidence-based knowledge.

5.4 Proving the hidden assumptions of synthetic genomics by creating life

Synthetic genomics seems to be based on several assumptions that are contentious. Hence, the possible success of synthetic genomics could prove the correctness of these assumptions, which would indeed be an important case of KTM.

The first assumption is the idea that genomes can simply be divided up into functional building blocks that work independently of each other and of the particular cell environment (‘genome modularity’). While many decades of molecular genetics have shown that this is not that easy, computer scientists who recently embarked on synthetic biology are quite optimistic that their own approach to genome modularity will be successful.

The second assumption is genome-essentialism or -centrism, which takes the genome as a whole and its functional parts to be the essence of life. In that view, the creation of a living organism can be equated with synthesizing its genome, or just with designing its sequence on the computer. Thus, for instance, for synthetic biologists a ‘Biobrick’ is not a functional unit of a living organism, like an organ, but a piece of DNA sequence information that encodes the proteins that together perform that function. If genome-essentialism were right, we could in fact ignore that the genome still has to be made in the laboratory and inserted into a host cell to form a living organism, and that the result would be a hybrid rather than artificial life from scratch, and instead believe that the severe limitations to hybridization known for many decades in microbiology are meaningless. Synthetic biologists are again optimistic that the obstacles can easily be overcome, sometimes relying on a computer metaphor: the software (synthetic genome) reboots the hardware (host cell) (e.g. Gibson et al. 2010). Again, only experimental success might be able to prove genome-essentialism.

The third, and perhaps most important, assumption, which supports the second one, is gene determinism, according to which DNA/RNA determine proteins, but never vice versa. (This is related to but not the same as the so-called ‘Central Dogma of molecular biology’, according to which sequence information in biomolecules flows unidirectionally from DNA/RNA to proteins, first stated by British physicist Francis Crick as early as 1957.) If proteins were fully determined by genes, they would be of secondary importance; all one needs to know is the genome and how it determines proteins. However, from the point of view of chemistry, where all the molecules of a system mutually interact with each other and where strict irreversibility is impossible because of thermodynamics, gene determinism appears implausible, which might be one reason why the original principle was framed in terms of ‘information flow’ rather than of scientific causality.

Indeed, since the mid-20th century, a myriad of biochemical impacts of enzymes (i.e. proteins) on DNA/RNA have been discovered: from DNA cleavage to DNA repair mechanisms involving endonucleases, to retroviral DNA generation by reverse transcriptase (another enzyme); from the transcription of a gene to RNA by RNA polymerase, to the regulation of gene expression by a variety of enzymes, including DNA methyltransferases, which determine which part of a DNA/RNA sequence is expressed into proteins at what time. The latter example has led to the growing field of epigenetics, which studies the inheritable alteration of DNA by enzymes as a response to environmental conditions. The results of epigenetics thus increasingly undermine not only gene determinism but also classical Darwinism, according to which environmental adaptations cannot be passed on to offspring. Defenders of gene determinism argue that all proteins in an organism, however they might act on DNA/RNA, are ultimately derived from DNA/RNA, and that the actual sequence of inherited DNA/RNA remains unaltered by proteins whatever methylation pattern they have.

Meanwhile, synthetic biology, as an endeavor to create new biological functions transferable between organisms, has increasingly incorporated epigenetic results in its genome modularity approach in terms of ‘gene regulatory networks’. However, it is not so clear how this has been translated into the more ambitious goal of creating an entire organism from scratch by just synthesizing a genome without the corresponding proteins, the cell membrane, and the myriad of other non-protein substances of protoplasm. If the minimal genome approach is one day successful in producing a system with minimal life functions, it could prove the correctness of the assumptions of genome modularity, genome essentialism, and gene determinism, which would be a landmark in the entire history of biological KTM. However, one would have to look very closely at the hidden experimental preconditions that possibly smuggle in the non-genome parts from host organisms that might be essential for the experimental results and which would again undermine the assumptions.

6. Conclusion

Up to the late 18th century, the description of the natural world as it is, or better: as it appears to a distant observer or impartial witness, belonged to natural history; if the description was cast in quantitative terms, it belonged to mathematics. By contrast, natural philosophy (science) aimed at understanding natural changes by reference to the natural causes of change.

If we apply that historical distinction to the present, most of our experimental sciences seem to try to understand changes and their causes by varying the contextual conditions of their experiments, i.e. they study changeabilities. There is no way to experimentally study changeabilities other than by actually trying to perform changes. This is most obvious in chemistry, because all chemical properties are reactivities or chemical changeabilities.

Once the idea of a static biological world was abolished, biology also emerged as a science of changeabilities, with genetics and evolution theory at its core. (Even today’s effort at recording the world’s biodiversity aims at its variability and vulnerability to human impacts.) In any experimental science the study of changeabilities necessarily involves human manipulation and change, which departs from the spectator theory of science. That is when and why KTM became a guiding epistemological principle in biology, too, and when the traditional philosophy of science became obsolete, as in chemistry.

While KTM, in its hard version, is logically built into chemistry and thus unavoidable (strong and hard KTM), biology seems to be still confined to soft and weak KTM. However, that evaluation is based on its pre-evolutionary and pre-modern species concept, according to which we would have to consider most experiments of change as mere modification rather than as the making of something new. On the other hand, the religious connotation of life-making has created such a buzz that, as public funding can be increased by public excitement, the slightest modification of an organism has been called ‘creation of life’, to be echoed by media responses of ‘playing God’. A poorly developed ontology thus easily contributes to confusion in ontological, epistemological, and moral matters.

An improved ontology would certainly help develop KTM epistemology in biology further. Once the epistemic goals are more clearly defined and distinguished from the alleged technological goals, the epistemological method can better be laid out. Only then can we scrutinize in more detail the KTM claims of protocell research and synthetic genomics, and distinguish each between being trivial and non-trivial, soft and hard, and strong and weak KTM.

There is also a rich history of KTM in experimental physics, which is beyond the scope of this paper, however. Suffice it to point to the common historical roots of nuclear chemistry and nuclear physics, as in the discovery of nuclear fission in 1938 by chemists Otto Hahn and Fritz Strassmann, and of the search for new ‘effects’, such as the Faraday effect, Seebeck effect, or chemo-luminescence, where entirely new properties emerge, which might better be understood from the point of view of process ontology (Schummer forthcoming).

KTM differs from the spectator view in that something new is made in the material world, even if the creation is not the main purpose. That not only lends itself to technological exploitation, it also requires ethical consideration of the science itself, which literally changes the world with possible adverse effects starting right in the laboratory. Whereas the philosophers of armchair science might be content with logical reasoning, the philosophers of sciences that realize KTM at work cannot afford such a narrow focus. They need to integrate epistemological, ontological, and ethical reasoning. Modern chemistry, biology, and nuclear physics are telling examples of that need for an integrated philosophy of science. Their epistemic successes are testimonies not only to the inappropriateness of the spectator view, but also to its deeply flawed assumption of neglecting ethical and ontological considerations (for chemistry see Schummer & Børsen 2021).

Notes

[1] Earlier versions of this paper have been presented at various times and locations over the previous ten years, first as ‘From Synthetic Chemistry to Synthetic Biology: The Revival of the Verum Factum Principle’, as an invited keynote address of the Symposium of the Joint Commission of IUHPS, 14th Congress of Logic, Methodology and Philosophy of Science, Nancy, France, 19-26 July 2011.

[2] For a detailed discussion of this and the following, see Blumenberg 1973, pp. 72ff.

[3] While Vico’s original approach (Vico 1710) aspired to have universal validity in all areas of knowledge, his announced application to natural philosophy seems to be lost, and that to ethics was left unwritten.

[4] That was already noticed by the contemporary philosopher Friedrich Heinrich Jacobi in his Von den göttlichen Dingen und ihrer Offenbarung (1811, p. 122f), see also Hösle 2016.

[5] To be more correct, the statement was posthumously found on the blackboard of Feynman’s office at CalTech (see https://archives.caltech.edu/pictures/1.10-29.jpg), which does not strictly prove that he actually supported it.

[6] "Quod magisterium imitetur creationem universi" (‘The (alchemical) mastery should imitate the creation of the universe’, quoted from Ruska 1926, p. 185).

[7] A classic example from synthetic genomics is the synthesis of pathogenic virus genomes, after the group of Eckard Wimmer first synthesized the poliovirus genome in 2002. Such results may help improve our understanding of virus evolution, pandemics, and vaccines, but they may also help in producing biological weapons.

7. References

Bergmann, G.: 1954, ‘Sense and Nonsense in Operationism’, The Scientific Monthly, 59, 210-215.

Berthelot, M.: 1860, Chimie Organique fondée sur la Synthèse, Paris: Mallet-Bachelier.

Blumenberg, H.: 1973, Der Prozeß der theoretischen Neugierde, Frankfurt: Suhrkamp.

Brock, W.H.: 1992, The Fontana History of Chemistry, London: Fontana.

Corey, E.J.; Cheng, X.-M.: 1989, The Logic of Chemical Synthesis, New York: Wiley.

Danielli, J.F.: 1974, ‘Genetic Engineering and Life Synthesis’, International Review of Cytology, 38, 1-5.

Dewey, J.: 1929, The Quest for Certainty, London: Allen & Unwin.

Engels, F.: [1886] 1975, ‘Ludwig Feuerbach und der Ausgang der klassischen deutschen Philosophie’, in: Karl Marx & Friedrich Engels – Werke, Berlin: Dietz, vol. 21.5 [reproduced from 1st ed., Berlin-East, 1962. pp. 274-282; available online: http://www.mlwerke.de/me/me21/me21_274.htm; English: https://www.marxists.org/archive/marx/works/1886/ludwig-feuerbach/index.htm, accessed 27 Dec. 2019].

Fischer, E.: [1915] 1924, ‘Die Kaiser-Wilhelm-Institute und der Zusammenhang von organischer Chemie und Biologie’, in: E. Fischer: Gesammelte Werke: Untersuchungen aus verschiedenen Gebieten: Vorträge und Abhandlungen allgemeinen Inhalts, ed. M. Bergmann, Berlin: J. Springer, pp. 796-809.

Gibson, D.G. et al.: 2010, ‘Creation of a Bacterial Cell Controlled by a Chemically Synthesized Genome’, Science, 329, 52-56.

Hacking, I.: 1983, Representing and Intervening: Introductory Topics in the Philosophy of Natural Science, Cambridge: Cambridge University Press.

Hösle, V.: 2016, Vico’s New Science of the Intersubjective World, Notre Dame: University of Notre Dame Press.

Kant, I.: 1787, Kritik der reinen Vernunft, Riga.

Latour, B.: 1987, Science in Action: How to Follow Scientists and Engineers Through Society, Harvard: Harvard University Press.

Leduc, S.: 1912, La Biologie synthétique, Paris: A. Poinat.

Mann, S.: 2013, ‘The Origins of Life: Old Problems, New Chemistries’, Angewandte Chemie International Edition, 52(1), 155-162.

Morris, P.J.T. (ed.): 2002, From Classical To Modern Chemistry: The Instrumental Revolution, London: Royal Society of Chemistry.

Philo of Alexandria: On the Migration of Abraham [available online at: http://www.earlyjewishwritings.com/text/philo/book16.html, accessed 12 Dec. 2019].

Ruska, J.: 1926, Tabula Smaragdina: Ein Beitrag zur Geschichte der hermetischen Literatur, Heidelberg: Winter.

Rheinberger, H.-J.: 1997, Towards a History of Epistemic Things: Synthesizing Proteins in the Test Tube, Stanford: Stanford University Press.

Schummer, J.: 1994, ‘Die Rolle des Experiments in der Chemie’, in: P. Janich (ed.): Philosophische Perspektiven der Chemie, Mannheim: Bibliographisches Institut, pp. 27-51.

Schummer, J.: 1997a, ‘Scientometric Studies on Chemistry, I: The Exponential Growth of Chemical Substances, 1800-1995’, Scientometrics, 39, 107-123.

Schummer, J.: 1997b, ‘Challenging Standard Distinctions between Science and Technology: The Case of Preparative Chemistry’, Hyle: International Journal for Philosophy of Chemistry, 3, 81-94.

Schummer, J.: 1998, ‘The Chemical Core of Chemistry, I: A Conceptual Approach’, Hyle: International Journal for Philosophy of Chemistry, 4, 129-162.

Schummer, J.: 2002, ‘The Impact of Instrumentation on Chemical Species Identity’, in: P.J.T. Morris (ed.): From Classical To Modern Chemistry: The Instrumental Revolution, London: Royal Society of Chemistry, pp. 188-211.

Schummer, J.: 2003, ‘The Notion of Nature in Chemistry’, Studies in History of Philosophy of Science A, 34, 705-736.

Schummer, J.: 2009, ‘The Creation of Life in Cultural Context: From Spontaneous Generation to Synthetic Biology’, in: M. Bedau & E. Parke (eds.): The Ethics of Protocells: Moral and Social Implications of Creating Life in the Laboratory, Cambridge, MA: MIT-Press, pp. 125-142.

Schummer, J.: 2011, Das Gotteshandwerk: Die künstliche Herstellung von Leben im Labor, Berlin: Suhrkamp.

Schummer, J.: 2015, ‘The Methodological Pluralism of Chemistry and Its Philosophical Implications’, in: E.R. Scerri & L. McIntyre (eds.): Philosophy of Chemistry: Review of a Current Discipline, Dordrecht: Springer, pp. 57-72.

Schummer, J.: 2016, ‘"Are You Playing God?": Synthetic Biology and the Chemical Ambition to Create Artificial Life’, Hyle: International Journal for Philosophy of Chemistry, 22, 149-172.

Schummer, J.: forthcoming, ‘Material Emergence in Chemistry: How Science Explores the Unknown by the Coevolution of Explorative Experimentation and Pluralistic Model-Building’, in: J.-P. Llored (ed.), Philosophizing from and with Chemistry (Synthese Library - Studies in Epistemology, Logic, Methodology, and Philosophy of Science), Springer.

Schummer, J. & Børsen, T. (eds.): 2021, Ethics of Chemistry: From Poison Gas to Climate Engineering, Singapore et al.: World Scientific Publishing.

Vico, G.: [1710] 2010, On the Most Ancient Wisdom of the Italians: Drawn out from the Origins of the Latin Language, trans. by J. Taylor, New Haven, CT: Yale University Press.

Joachim Schummer:

Berlin, Germany & La Palma, Spain;

js@hyle.org

Copyright © 2021 by HYLE and Joachim Schummer
