http://www.hyle.org

Copyright © 2020 by HYLE and Joachim Schummer

The Chemical Prediction of Stratospheric Ozone Depletion:

| O2 + UV → 2 O | (1) |

| O + O2 → O3 | (2) |

| O + O3 → 2 O2 | (3) |

| O3 + UV (+ Cat) → O2 + O | (4) |

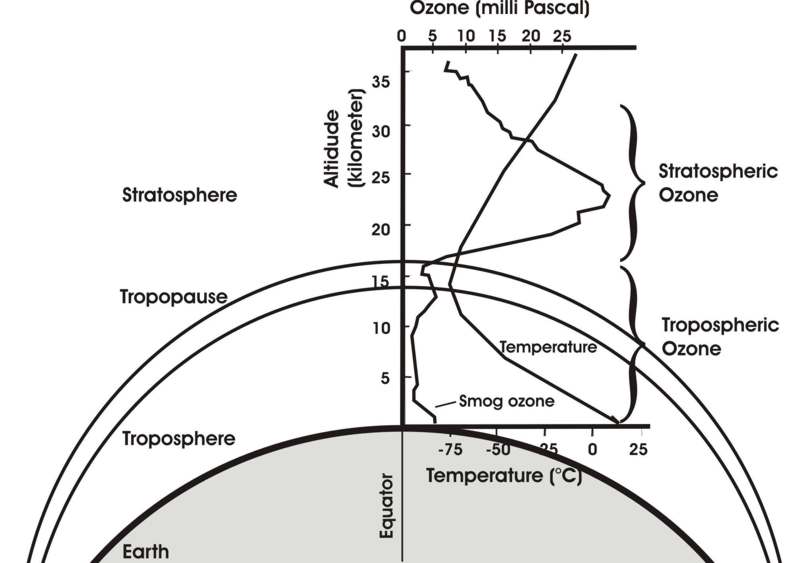

The continuous UV-absorbing formation and decay of O3, according to Reactions 1-4, known as the ozone-oxygen or Chapman cycle since 1930, produces heat and forms an ozone-oxygen equilibrium that depends on concentrations, temperature, and UV radiation, shaping the stratospheric ozone profile (Figure 1). Because the upper region of the atmosphere is more exposed to UV and shields the lower region by UV absorption, the heat-producing Chapman cycle becomes less active as we move downwards. Therefore the temperature decreases from upper to lower levels, in the opposite direction compared to the Earth’s surface region, the troposphere, where the temperature gradient is governed by the infrared radiation from the Earth. As a result we have a temperature minimum at the level where the Chapman cycle becomes almost inactive, which is called the tropopause, where troposphere and stratosphere meet. On the upper side there is a temperature maximum (the stratopause) because gas concentrations decrease with height, making chemical reactions less likely. The stratosphere is thus that region of the atmosphere where the heat-producing and UV-absorbing Chapman cycle is active, governed by the formation and decay of ozone, which protect life from dangerous UV radiation and let through only some of the much less dangerous UV-A part (λ>315 nm). It is a fragile structure because the ozone-oxygen equilibrium also depends on the concentrations of various compounds that catalyze only the decay of ozone (Reaction 4) but not its formation, as we will see later.
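How a catalyst of ozone decay shifts the equilibrium can be made concrete with a minimal steady-state sketch of Reactions 1-4. It is an illustration under stated assumptions, not a model taken from the literature cited here: the rate symbols `j1`, `k2`, `k3`, `j4` (one per reaction) and the function name are hypothetical, the numerical values are dimensionless placeholders, temperature dependence and any collision partner are ignored, and O and O3 are assumed to interconvert (Reactions 2 and 4) much faster than they are created or destroyed (Reactions 1 and 3).

```python
import math


def steady_state_o3_to_o2(j1, k2, k3, j4):
    """Steady-state [O3]/[O2] ratio for Reactions 1-4 (illustrative only).

    Assumed rate laws (hypothetical symbols, not from the article):
      (1) O2 + UV -> 2 O       rate = j1 * [O2]
      (2) O + O2  -> O3        rate = k2 * [O] * [O2]
      (3) O + O3  -> 2 O2      rate = k3 * [O] * [O3]
      (4) O3 + UV -> O2 + O    rate = j4 * [O3]

    Balances used:
      j1 * [O2] = k3 * [O] * [O3]      (1) creates what (3) removes
      k2 * [O] * [O2] = j4 * [O3]      (2) and (4) interconvert O and O3
    Eliminating [O] gives [O3]/[O2] = sqrt(j1 * k2 / (k3 * j4)).
    """
    return math.sqrt(j1 * k2 / (k3 * j4))


# Placeholder values: only the relative change matters here.
baseline = steady_state_o3_to_o2(j1=1.0, k2=1.0, k3=1.0, j4=1.0)
catalyzed = steady_state_o3_to_o2(j1=1.0, k2=1.0, k3=1.0, j4=2.0)

# Doubling the effective rate of Reaction 4 lowers the ozone ratio
# by a factor of 1/sqrt(2) in this simplified scheme.
print(catalyzed / baseline)
```

In this sketch, a compound that only accelerates Reaction 4 lowers the steady-state ozone level without affecting ozone formation, which is the sense in which the ozone layer is called fragile above.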

The troposphere and stratosphere are distinguished not only by their opposite temperature gradients and different chemical compositions; the dynamics of their air masses also differ greatly. What we call weather – the vaporization of surface water and the condensation of vapor in the form of rain, the buoyancy of warmed air masses at the surface, and the resultant temperature and pressure differences that cause the winds – is largely confined to the troposphere. In contrast, the stratosphere is rather calm and protected against tropospheric dynamics by the inversion of the temperature gradient. Heated air masses that move up the tropospheric temperature gradient because of their lower density through buoyancy stop at the tropopause. In the stratosphere, gases usually move by diffusion rather than by bulk movement, such that the exchange between the two spheres is very slow. However, the tropopause is not a fixed level but varies with local turbulence, time of day, season, and, to the largest degree, latitude, overall at altitudes between 6 and 18 km.

Figure 1: Temperature and ozone concentration in the lower atmosphere (adapted from Parson 2003).

The layering of the atmosphere continues as we move upwards. Like the stratosphere, which is formed by the Chapman cycle, the thermosphere (ca. 80-500 km) is built by UV absorption, but now of higher-energy UV capable of ionizing various compounds, resulting again in higher temperature at higher levels, but with ozone being almost absent. Between the stratosphere and the thermosphere is the somewhat ill-defined mesosphere, where temperature decreases with height, as in the outermost ‘layer’, the exosphere, which extends towards outer space. None of these layers is fixed, contrary to how textbooks tend to portray them. They greatly vary by various parameters, and the only practicable way to distinguish them is by temperature gradient.

In geological times, the UV-protection shield was built up in steps. First, the thermosphere emerged (then extending to much lower levels) by absorption of the high-energy part of solar UV radiation (ca. 10-122 nm) and the ionization of most substances. Once sufficient amounts of oxygen could survive beneath the ionization radiation shield, it absorbed UV in the range up to around 200 nm wavelength by dissociation into O radicals, which allowed building the stratosphere by the formation of ozone, whose photodissociation absorbs UV up to almost 315 nm, called the Hartley Band or UV-B. Next to the high-energy part, UV-B is particularly destructive to organisms, because it affects weaker chemical bonds typical of biomolecules. It is this UV-B that would increase through the emission of chlorofluorocarbons.

2.2 Chlorofluorocarbons

Before we discuss how chlorofluorocarbons (CFCs) were predicted to affect the ozone layer, it is useful to look first at their earlier history. CFCs comprise a large set of compounds, basically hydrocarbons with all hydrogen atoms substituted by various combinations of chlorine and fluorine atoms.

The story of CFCs begins in 1891 when the Belgian chemist Frederic Swarts (1866-1940) tried to produce the first organic fluorine compound by mixing tetrachloromethane (CCl4) with antimony trifluoride (SbF3). At first he obtained trichlorofluoromethane (CCl3F), a colorless liquid that boils at 24 °C, and then produced numerous other organic fluorine compounds by the same method (Kaufmann 1955). Swarts seems to have had no direct commercial interest, as he did not file any patent; his research aimed at establishing a new substance class and exploring its physical and chemical properties. The strong inertness of the compounds did not suggest any commercial use to him.

That radically changed in the 1930s when a team of chemists at General Motors in the US, led by Thomas Midgley (1889-1944), turned Swarts’ synthesis into the large-scale industrial production of CCl3F, CCl2F2, and other chlorofluorocarbons that came to be known as freons under patent protection for a variety of uses. Their low boiling point, chemical inertness, stability, noncombustibility, and nontoxicity made CFCs ideal refrigerants. Earlier refrigerators, based on closed cycles of dimethyl ether, ammonia, sulfur dioxide, or methyl chloride, all carried severe risks of fire, explosion, or toxicity if the refrigerant leaked out of the closed cycle through corrosion or accidents. Perhaps more than any other industrial chemical, CFCs changed people’s lifestyle, particularly by the use of refrigerators and air-conditioning, and made living in extremely warm and humid climates comfortable. CFCs also soon became the standard propellants in ordinary sprays (colloquially called ‘aerosols’), such as in hair sprays, and replaced the earlier pump spray systems. The chemical industry widely used them as blowing agents for foam rubber and as solvents in chemical processes. Their chemical inertness also made them ideal fire extinguishing substances that would just disappear after their usage without causing any damage.

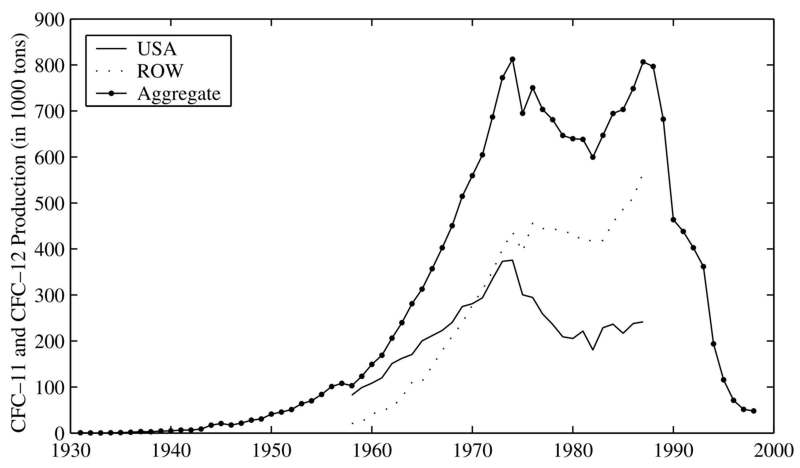

Based on voluntary reports by the world’s leading manufacturers, the commercial production of the two main CFCs (CCl3F and CCl2F2, known as CFC-11 and CFC-12) took off in the mid-1940s, with about 20,000 metric tons in 1945, and rose to more than 800,000 tons in 1974 (see Figure 2, AFEAS 1993, Auffhammer et al. 2005). After that it sharply declined in the US, and after about 1987 also in the rest of the world, where there was still growth in the early 1980s. At the beginning CFCs were only produced in the US, but in the late 1950s, through licensing and then by fading local patent protection, production started in various other countries and outpaced US manufacturers around 1970.

Figure 2: The production of CFC-11 and CFC-12 in the US and the rest of the world (ROW) (from Auffhammer et al. 2005).

The first knowledge of the atmospheric distribution of CFCs was produced by the independent British scientist James Lovelock (b. 1919), whom many know only from his Gaia hypothesis: the earth as a self-regulating system in which life, as we have seen in the last Section, shapes its own atmospheric environment. Trained as a chemist and physician, Lovelock became an entrepreneur and writer after he had revolutionized analytical chemistry with his invention of the electron capture detector (ECD) for gas chromatography. When a gas sample is injected into the top of a tube filled with a stationary and permeable material, such as a gel, and carried through the tube by a constant stream of an inert gas, e.g. N2 or helium, the compounds of the sample are emitted one after the other at the end of the tube at characteristic retention times, depending on their different interactions with the stationary material. Once the apparatus is calibrated with standard samples, you can qualitatively and quantitatively analyze gaseous or volatile mixtures, provided you have a suitable detector at the end of the tube. That technique, gas chromatography, had already been developed in the mid-1940s, particularly by the German physical chemist Erica Cremer. However, Lovelock’s ECD made gas chromatography useful for environmental analysis, as it increased the sensitivity by several orders of magnitude up to a then incredible level of one part per trillion (ppt) (Morris 2002). The ECD operates with a radioactive element, such as 63Ni, that emits electrons to a positively charged anode, thus closing an open electric circuit and producing a steady electric current. Gas molecules, such as CFCs, that pass through the electron beam and can absorb electrons are then detected by a temporary decrease of the current.

Lovelock, who had already found considerable CFC concentrations in the air over Ireland, embarked on a ship towards Antarctica in 1971 to measure the latitudinal distribution of CFCs. His original concern was not environmental, as nobody imagined any threat from these inert compounds. Rather he was using CFCs as tracers to better understand tropospheric dynamics, particularly the exchange between the northern hemisphere (where CFCs had been manufactured and used) and the southern hemisphere (where they had not). His original research proposal to a British funding agency was rejected because the expert panel found it impossible that one could measure compounds at the ppt level, particularly not with an instrument that was built "on the kitchen table", as Lovelock loved to portray it (Lovelock 2000, pp. 206ff.). But it worked out, and the results showed tropospheric abundance all the way down to Antarctica, from which Lovelock concluded that CFCs lack a sink and accumulate in the atmosphere (Lovelock et al. 1973). In 1974 he also measured for the first time, and by the same technique, the vertical distribution of CFCs in the troposphere and lower part of the stratosphere by taking samples from a military aircraft (Lovelock 1974). This study was now undertaken from an environmental angle, since Rowland and Molina had shortly before published their groundbreaking research on the detrimental effects of CFCs on stratospheric ozone.

2.3 The prediction of CFCs’ stratospheric ozone depletion

During the 1960s and early 1970s, when the environmental movement started and raised public concerns about the adverse impacts of various technologies, the stability of the stratosphere became a matter of scientific investigation. Nuclear tests, rockets, and supersonic aircraft all interfered with the stratosphere. Moreover, the Chapman cycle, based on laboratory experiments of the kinetics of the individual reactions, predicted a higher ozone concentration than was actually calculated from the incoming UV radiation on the Earth’s surface. Laboratory experiments proved that various possible compounds could selectively catalyze the photodissociation of ozone (Reaction 4), thereby shifting the equilibrium. Thus, if human activity moved these catalysts into the stratosphere, the ozone layer could easily be damaged.

The first candidate discussed was the HO radical, formed by photodissociation of the water vapor in the exhaust of supersonic aircraft, which flew in the stratosphere. However, it turned out that the impact was very low compared to other natural routes of HO formation. A second candidate, first suggested by Dutch atmospheric chemist Paul Crutzen (1970), was NO. It usually oxidizes in the troposphere to NO2, but it can also be newly formed in the stratosphere from the persistent nitrous oxide (N2O), or directly exhausted by supersonic aircraft (Johnston 1971). N2O is naturally produced by soil bacteria and thus is a natural source of ozone depletion, but its effect grows with the increased use of fertilizers (organic or not) in agriculture. The third candidate was the chlorine radical (Cl), of which both natural and human sources at first appeared of minor importance – particular concerns were about the proposed space shuttle exhaust of HCl.

All these scientific hypotheses received tremendous media attention around 1970, particularly in the US. While original concerns about damaging the ozone layer focused on the effect of climate change, fears grew even bigger when physiologists pointed out the effects on life on earth, including rising skin cancer rates in humans. Because of the novel kind of threat posed by humans messing with the stratosphere, numerous expert panels and committees were established, besides the regular meetings of the atmospheric science societies. The debates had a major impact on the formation of a new kind of governmental institution in the US in 1972, the Office of Technology Assessment (Kunkle 1995), later copied in many other countries. The agency was to routinely scrutinize the possible adverse effects of new or emerging technologies and provide advice to government.

When the young Mexican chemist Mario J. Molina (b. 1943) joined the physical chemistry group of F. Sherwood Rowland (1927-2012) as a postdoc at the University of California, Irvine, in the fall of 1973, Rowland had recently read Lovelock’s paper on the global abundance of CFCs. He suggested to his postdoc, among other topics, to research the photo-kinetics and life cycle of CFCs to see if they could be a source of stratospheric Cl causing ozone depletion, a project for which he had already obtained a grant. Neither Molina nor Rowland had any particular background in meteorology; they were physical chemists with a focus on kinetics. However, the work was quickly done in four months by literature research because all the required data had been published before. The data just had to be assembled into a newly developed atmospheric life cycle model of CFCs, predicting stratospheric ozone depletion by human activity, which the authors submitted to Nature in January 1974 (Molina & Rowland 1974) and for which they would share the Nobel Prize in Chemistry with Paul Crutzen in 1995.

Their model consisted of four theses that they quantified as far as possible. First, as Lovelock had argued before, CFCs distribute and accumulate in the troposphere because there is almost no sink (by photolysis, chemical reaction, or rainout) resulting in lifetimes of 40-150 years. Second, the only important sink is the slow diffusion to the stratosphere, where CFCs photodissociate by UV radiation (λ<220 nm), absent in the troposphere, to form free Cl atoms, e.g.:

| CCl2F2 + UV → CClF2 + Cl | (5) |

Third, among all possible stratospheric reactions of Cl the most likely and effective one is the depletion of ozone by formation of chlorine monoxide (ClO):

| Cl + O3 → ClO + O2 | (6) |

Fourth, and most importantly, the most likely and effective reaction of ClO is with O (produced from the photodissociation of O2 according to Reaction 1) which again sets free Cl:

| ClO + O → Cl + O2 | (7) |

Hence, the net reaction of (6) and (7) is the depletion of ozone by O in which Cl is regenerated and thus formally acts like a catalyst:

| O3 + O + Cl → 2 O2 + Cl | (8) |

Because all known competing reactions to (6), which would consume Cl, are slow or reversible, the authors concluded that Cl, once set free in the stratosphere, would deplete ozone for years, at a five times higher rate than NO.[1] And because the amount of CFCs that had already been set free in the troposphere slowly diffuses into the stratosphere, we should expect ozone depletion for a "lengthy period" in the future, even if CFC production stopped immediately (ibid.).
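What a "lengthy period" could mean can be illustrated with a back-of-the-envelope sketch. It assumes, beyond what the text states, that the quoted lifetimes of 40-150 years are e-folding times of a simple first-order loss and that emissions stop completely at year zero; transport delays and the further fate of the released Cl are ignored. The function names are hypothetical.

```python
import math


def fraction_remaining(years_since_stop, lifetime_years):
    """Fraction of the CFC burden left after emissions stop.

    Assumes first-order loss with e-folding time `lifetime_years`.
    """
    return math.exp(-years_since_stop / lifetime_years)


def years_until_fraction(fraction, lifetime_years):
    """Years until the burden has fallen to `fraction` of its initial value."""
    return -lifetime_years * math.log(fraction)


# Lower and upper lifetime bounds quoted in the text (40-150 years).
for lifetime in (40, 150):
    half_time = years_until_fraction(0.5, lifetime)
    left_after_50 = fraction_remaining(50, lifetime)
    print(f"lifetime {lifetime} y: half gone after {half_time:.0f} y, "
          f"{left_after_50:.0%} left after 50 y")
```

Under these assumptions, half of the accumulated CFCs would still be present roughly 28 to 104 years after a complete production stop, depending on which end of the lifetime range applies.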

Molina and Rowland, who elaborated on their model in a series of further papers, were initially not right in every detail. In particular, they predicted a faster and longer-lasting ozone depletion than was actually measured much later, because they had initially ignored an important sink (the reaction of Cl with N2O) and used inaccurate reaction rate constants from the literature (particularly for the formation of chlorine nitrate), which they later corrected themselves. Many other chemists helped develop the model further in various details, but the core of the model for predicting global stratospheric ozone depletion has remained largely intact. Various theses were soon confirmed by atmospheric measurements that involved large research teams, including the tropospheric accumulation of CFCs and the presence of CFCs and ClO in the stratosphere. However, because of various difficulties (technical, financial, and natural[2]), it was not until 1988 that global stratospheric ozone depletion could be experimentally verified, first by analysis of ground station data (WMO 1991, p. 4)[3] and then combined with satellite data (Stolarski et al. 1992). The results showed, for instance, for the northern mid-latitudes an average stratospheric ozone decrease of around 2% per decade in the period 1970-1991 with strong seasonal and regional variations.

In their original model, Molina and Rowland did not yet consider local and seasonal meteorological phenomena and knowingly excluded, for absence of scientific knowledge at the time, the "possible heterogeneous reactions of Cl atoms with particulate matter in the stratosphere" (Molina & Rowland 1974). That turned out to be crucial for what came to be known as the ‘Ozone Hole’, a temporary strong decrease, up to 50%, of stratospheric ozone in the Antarctic spring and more recently also in the Arctic spring. The Antarctic Ozone Hole was first discovered by UV measurements from the British Antarctic ground station (Farman et al. 1985). It was only retrospectively confirmed for the previous years by the satellite-based Total Ozone Mapping Spectrometer (TOMS), operated since 1978 by NASA, who seemed to have mistaken the unusually low ozone values for measurement errors over several years, lacking a routine for pattern recognition in the huge amounts of data produced every year (Christie 2000, pp. 43-52; Conway 2008, p. 73).

Much more so than in the Arctic, a strong circular wind (the polar vortex) surrounds the Antarctic in winter and isolates the polar air masses from the temperate latitudes, resulting in very low temperatures and polar stratospheric clouds (PSCs) composed of solid water and nitric acid (HNO3). In the absence of light and UV for about 3 months, there are no photochemical reactions forming O and Cl, such that both the Chapman cycle and the Molina-Rowland cycle are frozen in winter. The exact chemical mechanism that leads to the polar Ozone Hole, to the understanding of which both Molina and Rowland considerably contributed, is very complex and perhaps not yet fully understood. In simple terms, stratospheric Cl and ClO are temporarily stored during the polar winter in various compounds (including hydrogen chloride (HCl) and chlorine nitrate (ClONO2)), which on the surface of stratospheric cloud particles react to form molecular chlorine (Cl2) and hypochlorous acid (HOCl). Once the sun rises in spring, Cl2 and HOCl are photolyzed to Cl and OH by visible light. ClO can set free further atomic Cl (via dimerization to chlorine oxide dimer (ClOOCl) and photolysis to 2 Cl and O2). Under these conditions ozone depletion occurs again by equation (6) of the Molina/Rowland model, while atomic chlorine is now recycled from ClO not by equation (7) but via

| 2 ClO → ClOOCl → 2 Cl + O2 | (9) |

The crucial point is that in the first weeks of the polar spring, there is already enough visible light for these photodissociations that cause ozone depletion, whereas UV is still too scarce for the photodissociation of O2 required for ozone formation after the Chapman cycle (Reaction 1). Note that at the small angle of solar radiation in the polar spring, when the sun hardly moves above the horizon, all light towards the polar region enters the atmosphere at much higher latitudes and passes a wide cross-section of the stratosphere. Under these conditions, UV is almost completely absorbed while visible light is only scattered to some degree but reaches the polar region.
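The difference between the two chlorine cycles, Reactions 6 and 7 versus Reactions 6 and 9, can be checked by simple bookkeeping: add up the steps and cancel every species that appears on both sides. The sketch below does this; the helper `net_reaction` and the dictionary encoding of the reactions are illustrative choices, not part of the Molina-Rowland model, and the short-lived ClOOCl intermediate of Reaction 9 is skipped.

```python
from collections import defaultdict


def net_reaction(steps):
    """Sum reaction steps and cancel species that occur on both sides.

    Each step is (reactants, products, times), where reactants and
    products map a species name to its stoichiometric coefficient and
    `times` says how often the step runs per cycle.
    Returns (consumed, produced) as two dicts.
    """
    balance = defaultdict(int)
    for reactants, products, times in steps:
        for species, n in reactants.items():
            balance[species] -= n * times
        for species, n in products.items():
            balance[species] += n * times
    consumed = {s: -n for s, n in balance.items() if n < 0}
    produced = {s: n for s, n in balance.items() if n > 0}
    return consumed, produced


r6 = ({"Cl": 1, "O3": 1}, {"ClO": 1, "O2": 1})   # Cl + O3 -> ClO + O2
r7 = ({"ClO": 1, "O": 1}, {"Cl": 1, "O2": 1})    # ClO + O -> Cl + O2
r9 = ({"ClO": 2}, {"Cl": 2, "O2": 1})            # 2 ClO -> 2 Cl + O2

# Molina-Rowland cycle: one pass each of (6) and (7).
print(net_reaction([(*r6, 1), (*r7, 1)]))
# Polar spring cycle: two passes of (6), then one of (9).
print(net_reaction([(*r6, 2), (*r9, 1)]))
```

In both cases Cl and ClO cancel out, which is what makes chlorine act formally like a catalyst. The first cycle needs free O atoms (net O3 + O → 2 O2), whereas the second consumes ozone alone (net 2 O3 → 3 O2), consistent with the point that it can run while UV for the photodissociation of O2 is still scarce.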

In addition to CFCs, there are other ozone depleting substances (ODS). In particular, brominated organic compounds, which were widely used in fire extinguishers, set free bromine radicals in the stratosphere that react in a way similar to Cl. All ODS came to be regulated and eventually banned by a series of international conventions.

2.4 Political consequences

Molina and Rowland not only published scientific papers on their findings but also talked to fellow scientists, both chemists and meteorologists, at a time when the barrier between the two disciplines was still very high. Moreover, after a presentation at an American Chemical Society meeting in September 1974, Rowland gave a press conference that led to a series of alarming articles and reports in national media. Numerous interviews with journalists would follow as well as political consultancy in the form of testimonies at congressional hearings and memberships of expert panels.

When scientific results have political implications, researchers can suddenly find themselves involved in public discourses that greatly differ from that of the scientific community. Emotions like fear and hope, personal rivalry, corporate interests, and indirect political interests might effectively shape the discourse. An important intermediary zone between science and the public are committees, commissions, or panels that are composed of a mixture of scientific expertise, established authorities, representation of special interest groups, and political orientation. In the case of the potential ozone depletion by CFCs, several such committees were soon launched in the US, but neither Molina nor Rowland was originally a member of any of them because their case had to be assessed ‘independently’.

While an interagency task force (IMOS) and two committees by the National Academy of Sciences largely supported the Molina-Rowland model, as did several other scientists in their publications, and, directly or indirectly, called for legal action, others were more skeptical. The industries involved in the production and use of CFCs launched a Committee on Atmospheric Science to deny the ozone-depleting effect of CFCs, largely by denying all four theses of the Molina-Rowland model and by pointing to volcanoes as natural sources of ozone depletion (Oreskes & Conway 2010, pp. 114f.). The debate in the US has frequently been called "The Ozone War", echoing Dotto & Schiff 1978, but that is, compared to other countries, perhaps an exaggeration because most of the counter-arguments were very poor by scientific standards and hardly heard. It is also fair to say that DuPont, the main US manufacturer of CFCs, had repeatedly stated since 1974 that they would stop production once credible evidence was provided that CFCs had harmful effects (Parson 2003, p. 33). The intended debate on what counts as ‘credible evidence’ was certainly a measure to buy time before legal action would be taken (Smith 1998), but many US consumers had already refused to buy spray cans based on CFC aerosols.

It took hardly more than four years from the first public media report on the Molina-Rowland model to the legal ban of ‘nonessential’ CFC aerosols in sprays in the US in December 1978. Canada, Sweden, Denmark, and Norway followed soon, and some other countries imposed restrictions. Note that at that time stratospheric ozone depletion was still a scientific prediction, not yet confirmed by any measurement.

Two agencies of the United Nations (UN) took the initiative and brought the issue onto the international agenda: the World Meteorological Organization (WMO), founded in 1950, and the United Nations Environment Programme (UNEP), established in 1972. In the mid-1970s they both began coordinating international research based on expert panels that defined research needs. Eventually in 1981, UNEP called for an international convention to protect the ozone layer, which would be negotiated for six years (Petsonk 1990). Strong objections to radical measures came from Japan and several West European countries, where manufacturing plants for CFCs had recently been built. As a first step, the Vienna Convention for the Protection of the Ozone Layer was signed on 22 March 1985, two months before the discovery of the Antarctic Ozone Hole was published[4] and years before global stratospheric ozone decrease was confirmed by measurements. The Vienna Convention established only a legal framework for future coordinated research and regulative activities to be defined by ‘protocols’. The first one, a true landmark in international environmental law, was the Montreal Protocol on Substances that Deplete the Ozone Layer, signed in 1987, now under the impact of the Ozone Hole discovery. It provided a binding schedule for member countries to phase out the production of CFCs and other ODS, varying by the developmental status of the countries and the uses of ODS, the effect of which can be seen in Figure 2. Several amendments that established faster and stricter bans on ODS have followed since, such that by current estimates stratospheric ozone would recover by the end of the 21st century.

The Montreal Protocol is unique in world history in several regards. First, it is the only international agreement ratified by all 196 UN member states, reaching a 100% consensus. Second, it is the first ever international agreement on collectively dealing with an environmental issue, which at the time of the signature was not even sure to affect all latitudes, and which had to be balanced against huge economic interests. The convention and the political process to establish it became a model for many later environmental efforts, particularly on climate change, which had been discussed since the 1960s. Third, the short timeline of 14 years, from the first scientific publication that raised awareness of the issue to international signature, will probably remain unparalleled for a long time. Fourth, it is perhaps the strongest impact on international politics that a single chemical research project has ever had, competing only with the discovery of nuclear fission by the chemists Otto Hahn and Fritz Strassmann in 1938.

Although climate change, to which CFCs contribute, has absorbed much public attention and funding of atmospheric science, ozone depletion is still a focus of ongoing scientific research with the aim of preventing harm. For instance, in a recent paper, Stephen A. Montzka et al. (2018) find a tropospheric increase of CCl3F since 2012 originating from eastern Asia, which would be a clear violation of the Montreal Protocol.

3. Scientists’ Moral Responsibility of Hazard Foresight

3.1 Introduction

The historical case above invites ethical analysis. For instance, it provides the most prominent and historically most influential example of the precautionary principle (PP), before ethicists had discussed it. The PP requires that protective measures should be undertaken if there is considerable evidence, rather than decisive proof, for a hazard, and that the burden of proof is on the side of those who deny the hazard (see also Llored 2017). The international community of responsible politicians did exactly that in 1987, before global ozone depletion was empirically measured. Indeed, the US task force mentioned above (IMOS) had already argued in that direction in their 1975 report (Parson 2003, p. 35).

A second point for ethical analysis involves the moral responsibility of CFC manufacturers before and after the Molina & Rowland paper of 1974. That would have to deal with the epistemological view held by many academic chemists and physicists, according to which all one needs to know about a compound is its molecular structure. Because the molecular structures of CFCs are very simple and were all well-known by 1974, they created the illusion of perfect knowledge and safety. No chemist at the time would have imagined that the ozone depletion potential is a property of chemical compounds, which it is of course. The ethical analysis should thereby turn into an epistemological analysis of a dangerous misunderstanding about chemical knowledge, and the uselessness of risk analyses in chemical issues where we do not even know yet where to look for risks.

However, the ethical topic to be discussed in the following, for which there is perhaps no better case than the present one, is this: Do scientists have a special moral duty for researching and warning us of possible hazards? Is there a scientific foresight responsibility justified on ethical grounds? For a start, let us look at four questions.

First, was Molina and Rowland’s 1974 publication, in which they warned of stratospheric ozone depletion by CFCs, morally praiseworthy? Everyone threatened by DNA-damaging UV radiation would certainly agree, thereby also agreeing that, apart from the scientific quality, there is an ethical dimension of assessing research.

Second, should Molina and Rowland have been morally blamed if they had not undertaken their life cycle research of CFCs? Here I assume all scientists and most others would strongly disagree. Praise and blame are not symmetrical: we usually do not blame somebody if he has not done something praiseworthy; and we usually do not praise somebody if she has omitted to do something blameworthy, say, a robbery. Instead, we blame someone for failure of the morally expected, and we praise only for doing more than is morally expected. However, what is expected may change over time and depends on whether we look at it in the present or retrospectively.

Third, let us modify the last question further and counterfactually assume that other scientists, X&Y, first discovered the threat posed by CFCs much later: Should Molina and Rowland have been morally blamed if they had not undertaken their life cycle research of CFCs earlier, although they had all the required resources and capacities to do what X&Y actually did later? Many scientists, who tend to look at this as a case of research competition only, might disagree. But others would perhaps wonder about the inactivity, the lack of concern for public welfare.

Fourth, let us assume, even more counterfactually, an inverted timeline. At first physicians notice an increased rate of skin cancer, which scientists after a while attribute to higher UV-B radiation, the cause of which is, after long research and debates, found in the stratospheric ozone depletion by the emission of CFCs. The rapid spread of skin cancer expedites the political process of negotiating a worldwide ban on CFCs. Then people would ask: ‘Why didn’t scientists investigate the threat of CFCs before they were commercially produced and emitted?’ In that case I assume both the manufacturers and the scientists would be morally blamed by most people. Note that the inverted timeline has been the rule in the past for many cases, with notable exceptions such as the prediction by Molina and Rowland.

The three counterfactual examples illustrate the space of morally assessing the omission of foresight research, from being morally neutral to questionable and blameworthy. Before exploring the limits of foresight responsibility in Section 3.3, let us first look at its ethical justification.

3.2 Ethical justification of the scientists’ special duty to hazard foresight

In order to investigate if scientists bear special ethical responsibility for researching and warning us of possible hazards, let us first look at the moral duty to rescue and then discuss if the former is a special case of the latter.

The moral duty to rescue is nicely illustrated by an example from the ethicist Peter Singer (1997): you notice that a person has fallen into a lake and appears to be drowning. It would be easy for you to rescue the person at the expense of some dirty clothes and a short while of your time. Do you have a moral duty to do so? Most people would certainly agree; in dozens of countries failing to help would even be a crime. Moreover, the duty to rescue can be justified by all major ethical theories that judge actions.

In utilitarianism, you ought to act so that you maximize happiness and reduce suffering overall. The little inconvenience of your dirty clothes counts for close to nothing compared to the drowning of the person to be rescued, such that rescuing is imperative here. In deontological ethics, which formulates general moral duties, the general duties of benevolence and of avoiding harm are typically at the top of the priority list, and both imply the specific duty to rescue. Both the Golden Rule and the Kantian categorical imperative provide further justifications. If you were drowning in the lake, you would morally expect anyone passing by to help you. And given the risk that you, like anyone else, could by sudden physical misfortune come into such a situation, you would reasonably want the duty to rescue to become a general moral obligation for anyone who is able to fulfill it.

The ethical justification of the duty to rescue is based on three important conditions. First, you must be intellectually capable of foreseeing the risk, here, of distinguishing between playful swimming and the danger of drowning. Second, you must be physically and intellectually capable of taking useful measures to prevent the harm, here, being able to swim. Third, your own costs and risks of rescuing should be reasonable; here, for instance, nobody expects you to rescue somebody at the risk of your own life or of serious damage to your health.

Taking the first two conditions into account, not everyone has the same moral duty to rescue. A child or disabled person who does not recognize the danger or is unable to swim cannot in retrospect be held morally responsible for letting someone drown. On the other hand, people with special capacities to foresee a danger and take counteractive measures do have a special moral duty to act according to their capacities. Because scientists have particular intellectual capacities to foresee risks by knowledge and research, which no one else has, they bear a special duty to warn of possible risks that is justified on general ethical grounds. And in so far as they have particular intellectual and practical capacities for taking or inventing preventive measures against such risks, they also bear a special moral duty to do so.

The general moral duty to rescue thus depends on one’s own intellectual capacity, with important implications for scientists to research and warn us of possible hazards. While the notion that scientific knowledge implies special responsibilities has frequently been claimed, it has rarely been justified by ethicists or explicitly acknowledged by scientists. Fortunately, however, it has implicitly been followed by many scientists, not least by Molina and Rowland.

3.3 Limits of scientific foresight responsibility

Some chemists, in an effort to point out the importance of their discipline, tend to say that everything is chemistry. They are likely unaware of the ethical implications of that claim. If everything were chemistry, then every possible harm that happens in the world would have chemical causes and thus could potentially have been foreseen and perhaps even prevented by chemists. Chemists would then always have to be blamed for their omissions.

However, it follows from the ethical justification above that foresight responsibility is restricted to one’s actual, rather than pretended, intellectual capacity. That includes, but is not limited to, one’s areas of specialization and competence. The intellectual capacity of scientists also includes the ability to work themselves into new subject matters, even if that transcends their disciplinary boundaries. Recall that Molina and Rowland were physical chemists, with little knowledge about meteorology at first, but could develop an atmospheric life cycle model within a few months. It further includes the ability to identify and contact specialists in different fields, which is how much of today’s interdisciplinary research is initiated. Thus, chemists who suspect a potential threat that they do not immediately understand, e.g. the use of a combination of chemicals in a factory that might turn poisonous or explosive, are morally obliged to do some research on their own or contact fellow scientists with the relevant expertise.

A second limit of responsibility derives from the creativity of research. A potential hazard might not be noticeable by way of conventional thinking, but once you have spent some creative thinking on the issue – usually by looking at it from entirely different angles and questioning the received assumptions – it becomes obvious. Retrospectively it might even appear so obvious that people wonder why nobody had seen it before, as in the Molina-Rowland case, which can mislead moral assessments afterwards. The intellectual capacity for creative thinking varies greatly among people, including scientists. However, the profession of science consists in producing novel knowledge that no one has ever thought of before, for which creativity is essential and expected. Thus, although nobody is expected to do Nobel Prize-winning research, scientists are morally obliged to use their capacities for creative thinking in identifying potential hazards.

Should scientists actively search for potential hazards or just research those cases where they intuitively suspect a threat? Imagine that possible but undefinable hazards loom in a laboratory where researchers work and that you are responsible for their health. A responsible chemist would take all safety measures, including the employment of safety devices against unknown risks, and undertake research to identify potential threats. Because the active search for potential hazards belongs to the actual habit and capacity of experimental chemists, unlike for instance of theoretical physicists, it would be expected from them also in cases outside the lab.

However, there are several limits to the obligation of active research. First, research into potential hazards from chemical interactions is open-ended because one would have to investigate all possible chemical combinations under all possible conditions. Hence, it is inevitable to focus at first on cases where hazards of greater harm are suspected. Second, hazard research would consume all available time, leaving no room for other research, and it would lead to massive duplication of effort as long as the usual procedures of science are not established: the division of labor by subdiscipline-building and the documentation of research by publications. However, the division of labor creates new problems, as we will see in the subsequent section.

Finally, if chemists actually identify a threat, how far should they go to make their findings public? Of course a scientific publication, made in order to receive the honor of being the first to discover the hazard, is not all that is morally expected. The publication only serves to make the claim sound by scientific standards and communicates the issue to fellow scientists. One has to raise one’s voice louder and inform journalists, governmental agencies, and NGOs about the issue. However, much more so than in the times of Molina and Rowland, alarmism has become part of the standard rhetoric of science PR to attract or justify funding. Against the background of that noise, serious issues might remain unheard, which is a severe fault of the science-society relationship. Scientists are morally obliged to raise their voices, but society, in particular science policy makers in cooperation with scientific societies, is responsible for keeping effective channels open for serious warnings.

3.4 The dilemma of institutionalized Technology Assessment

In order to avoid parallel research and to professionalize scientific hazard foresight, the institutionalization of technology assessment (TA), either as governmental research agencies or as a scientific discipline, appears to be the ideal solution. Indeed, as was argued above, the awareness of novel technological threats to the stratosphere was crucial to the establishment of the US Office of Technology Assessment in 1972, which led to many TA offices worldwide. However, institutionalization has its downsides.

First, possible technological hazards rarely match the disciplinary division of science, as the Rowland-Molina case illustrates, which required a new combination of physical chemistry and meteorology. Although that helped establish the discipline of atmospheric chemistry, other possible hazards are clearly beyond that disciplinary scope. Any effective form of institutionalization would have to be composed of many different, ideally all, disciplines to allow for the fast setup of interdisciplinary project teams tailored to specific problems. However, institutionalization is a social process that establishes fixed networks, clear divisions of responsibilities, and strict conventions that tend to assume a life of their own, making it the opposite of a flexible organization that can quickly respond to new challenges. That is also true of scientific disciplines that develop their own ways of identifying and dealing with problems, thereby tending to ignore unconventional approaches. It is even more true of governmental institutes with strict divisions of labor and hierarchical orders. Who would seriously expect Nobel Prize-winning research, such as the Molina-Rowland prediction, from a governmental officer working in a hierarchical environment? Hence, if the hazard is truly unexpected and cannot be predicted by conventional methods, it would be better to rely in addition on the broad community of scientists, which includes individuals who dare to use unconventional methods.

The second difficulty, which is more important in the present context, is the erosion of moral responsibility by institutionalization. With any division of labor comes a division of occupational responsibilities, such that everyone has their clearly defined occupational or professional duties. With institutionalized TA, scientists might argue that it is not their business to research and warn of possible hazards because other people are responsible for doing exactly that, such that they may exclusively focus on their specific research projects. However, the argument is based on the widespread confusion between occupational and moral responsibility. It is one thing to do what your employer or peers expect you to do, and quite another to follow ethical guidelines, and sometimes the two conflict with each other.

Recall Singer’s example above and assume that there is usually a lifeguard at the lake where the person is about to drown, but today the lifeguard is absent or unable to do his job for whatever reason. It is of course the occupational duty of the lifeguard to rescue drowning people, and he might even be sued for his failure. But that is no moral excuse for your own omission; instead, it is your moral duty to rescue. Similarly, institutionalized Technology Assessment is no moral excuse for scientists not to care about possible hazards in the world that their intellectual capacity would possibly allow them to foresee.

Because of the first downside of institutionalization, its ineffectiveness, institutionalized TA was in all countries either abandoned or its original goal, scientific hazard foresight, was replaced by various social goals. These goals include awareness raising and consensus building on technological issues, mediation between stakeholders, and the implementation of societal needs and values in technological design. Thus, there is no longer any institutionalized scientific technology assessment in the original sense, as the name misleadingly suggests, to which scientists might want to point as an excuse. However, even if it existed, scientists would be morally obliged to research and warn of possible hazards, each according to their own intellectual capacities.

4. Conclusion

Most chemists, I assume, are guided in their research by some moral ideas of doing good, of improving our world, if only in tiny parts, by chemical means. The most common way in chemistry is by making new substances that can be employed for some improvement of our living conditions. However, what counts as an improvement heavily depends on cultural values and life styles, such that not every change is welcomed by everyone. In addition, material improvements frequently come with downsides, adverse consequences that were neither foreseen nor intended. The naive good will of doing good, without considering both possible adverse consequences and cultural values, is destined to moral failure. There is no moral duty of improving by all means because that is wrong by all ethical theories.

Another way of doing good has been highlighted in this paper: doing good by foreseeing and warning of possible harm, for which a moral duty can indeed be derived from the duty to rescue. What counts as harm, in particular harm to health, is less controversial across cultures and life styles than what counts as improvement. Moreover, the prediction and warning of possible harm leaves it up to those affected by the possible harm to choose their way of dealing with the issue. The options to choose from frequently include changes of habits and changes of technology, or a combination of both. In the case of CFC aerosols, many people first avoided spray cans and returned to pump systems, i.e., they changed their habits even before the ban. Shortly afterwards, various substitutes for CFCs were introduced as propellants, i.e., a change of technology, but some of the substitutes turned out to have some ozone depletion potential as well. If people are again informed about the possible risks of alternative options, they can make their own responsible choice.

Doing good by material improvements and doing good by researching possible hazards imply different research styles. The traditional focus in chemistry has been on studying how to make things in the laboratory. In contrast, researching possible hazards requires the study of open systems, which usually transcends disciplinary boundaries and is thus intellectually more demanding. Although a growing number of chemists have engaged in that kind of research since the 1960s, they are still a minority, and marginal in the dominant self-image of chemistry, despite the extraordinary societal impact of works such as that by Molina and Rowland. The overall lesson from this paper is thus: chemists who want to do good by any ethical standards should focus on, or should at least keep in mind at any time, scientific hazard foresight, because that is what they are morally required to do, before making new things.

In the ideal moral world, those who make things and those who research possible hazards work hand in hand from the beginning. Together they can do good by making improvements from which most risks have been eliminated long before the products come to the market.

Acknowledgment

I am grateful to Tom Børsen and two anonymous referees for helpful suggestions.

Further Reading

The story of CFCs and stratospheric ozone depletion has been told from various angles, including epistemological (Christie 2000), political (Parson 2003), and environmentalist (Dotto & Schiff 1978, Roan 1989).

Notes

[1] Replacing Cl with NO in equations (6)-(7) yields the core mechanism for ozone depletion by NO as suggested by Crutzen (1970).

[2] Natural difficulties included accounting for the solar cycle and the impact of volcanic eruptions.

[3] The main results were already made public in 1988 (Kerr 1988).

[4] The paper (Farman et al. 1985) was received on 24 December 1984, accepted on 28 March 1985, and published on 16 May 1985.

References

Alternative Fluorocarbons Environmental Acceptability Study (AFEAS): 1993, Production, Sales and Atmospheric Release of Fluorocarbons Through 1992. Technical report, AFEAS, Washington, DC. [available online: http://www.ciesin.org/docs/011-423/toc.html, accessed 24 October 2019].

Knoll, A.H. & Nowak, M.A.: 2017, ‘The timetable of evolution’, Science Advances, 3(5): e1603076 [available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5435417/, accessed 24 October 2019].

Auffhammer, M.; Morzuch, B.J. & Stranlund, J.K.: 2005, ‘Production of chlorofluorocarbons in anticipation of the Montreal Protocol’, Environmental and Resource Economics, 30(4), 377-391 [available online: http://scholarworks.umass.edu/resec_faculty_pubs/188, accessed 24 October 2019].

Børsen, T. & Nielsen, S.N.: 2017, ‘Applying an Ethical Judgment Model to the Case of DDT’, Hyle: International Journal for Philosophy of Chemistry, 23(1), 5-27.

Christie, M.: 2000, The Ozone Layer. A Philosophy of Science Perspective, Cambridge: Cambridge University Press.

Conway, E.M.: 2008, Atmospheric Science at NASA: A History, Baltimore: Johns Hopkins UP.

Crutzen, P.: 1970, ‘The influence of nitrogen oxides on the atmospheric ozone content’, Quarterly Journal of the Royal Meteorological Society, 96, 320-325.

Dotto, L. & Schiff, H.: 1978, The Ozone War, New York: Doubleday.

Eckerman, I. & Børsen, T.: 2018, ‘Corporate and Governmental Responsibilities for Preventing Chemical Disasters: Lessons from Bhopal’, Hyle: International Journal for Philosophy of Chemistry, 24(1), 29-53.

Farman, J.C.; Gardiner, B.G. & Shanklin, J.D.: 1985, ‘Large losses of total ozone in Antarctica reveal seasonal ClOx/NOx interaction’, Nature, 315, 207-210.

Holland, H.D.: 2006, ‘The oxygenation of the atmosphere and oceans’, Philosophical Transactions of the Royal Society B, 361(1470), 903-915. [available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1578726/, accessed 24 October 2019].

Johnston, H.: 1971, ‘Reduction of stratospheric ozone by nitrogen oxide catalysts from supersonic transport exhaust’, Science, 173, 517-522.

Kauffman, G.B.: 1955, ‘Frederic Swarts: Pioneer in organic fluorine chemistry’, Journal of Chemical Education, 32, 301-305.

Kerr, R.A.: 1988, ‘Stratospheric Ozone is Decreasing’, Science, 239, 1489-1491.

Kunkle, G.C.: 1995, ‘New Challenge or the Past Revisited? The Office of Technology Assessment in Historical Context’, Technology in Society, 17(2), 175-196. [available online: https://ota.fas.org/technology_assessment_and_congress/kunkle/, accessed 24 October 2019].

Llored, J.-P.: 2017, ‘Ethics and Chemical Regulation: The Case of REACH’, Hyle: International Journal for Philosophy of Chemistry, 23(1), 81-104.

Lovelock, J.E.: 1974, ‘Atmospheric halocarbons and stratospheric ozone’, Nature, 252, 292-294.

Lovelock, J.E.: 2000, Homage to Gaia: The Life of an Independent Scientist, Oxford: Oxford UP.

Lovelock, J.E.; Maggs, R.J. & Wade, R.J.: 1973, ‘Halogenated Hydrocarbons in and over the Atlantic’, Nature, 241, 194-196.

Molina, M.J. & Rowland, F.S.: 1974, ‘Stratospheric sink for chlorofluoromethanes: chlorine atom-catalysed destruction of ozone’, Nature, 249, 810-812.

Montzka, S.A. et al.: 2018, ‘An unexpected and persistent increase in global emissions of ozone-depleting CFC-11’, Nature, 557, 413-417.

Morris, P.J.T.: 2002, ‘"Parts per Trillion is a Fairy Tale": The Development of the Electron Capture Detector and its Impact on the Monitoring of DDT’, in: P.J.T. Morris (ed.): From Classical to Modern Chemistry: The Instrumental Revolution, Cambridge: Royal Society of Chemistry, pp. 259-284.

Oreskes, N. & Conway, E.M.: 2010, Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming, New York et al.: Bloomsbury.

Parson, E.A.: 2003, Protecting the Ozone Layer: Science and Strategy, Oxford: Oxford UP.

Petsonk, C.A.: 1990, ‘The Role of the United Nations Environment Programme (UNEP) in the Development of International Environmental Law’, American University International Law Review, 5(2), 351-391.

Roan, S.: 1989, Ozone Crisis: The 15-Year Evolution of a Sudden Global Emergency, New York et al.: Wiley.

Ruthenberg, K.: 2016, ‘About the Futile Dream of an Entirely Riskless and Fully Effective Remedy: Thalidomide’, Hyle: International Journal for Philosophy of Chemistry, 22(1), 55-77.

Singer, P.: 1997, ‘The Drowning Child and the Expanding Circle’, New Internationalist, 5 April [available online: https://newint.org/features/1997/04/05/peter-singer-drowning-child-new-internationalist, accessed 24 October 2019].

Smith, B.: 1998, ‘Ethics of Du Pont’s CFC Strategy 1975-1995’, Journal of Business Ethics, 17(5), 557-568.

Stolarski, R. et al.: 1992, ‘Measured Trends in Stratospheric Ozone’, Science, 256(5055), 342-349.

World Meteorological Organization (WMO): 1990, ‘Report of the International Ozone Trends Panel, 1988’, Geneva [available online: https://www.esrl.noaa.gov/csd/assessments/ozone/1988/report.html, accessed 24 October 2019].

Joachim Schummer:

Berlin, Germany & La Palma, Spain; js@hyle.org